High-Resolution Audio Systems Go Beyond Perceptions

We all are very familiar with audio in our lives. We use a variety of players in our homes, cars, and personal devices to listen to our favorite tunes when we are relaxing or on the go. We have grown up with music as a big part of our lives, and we bond with friends and family over the choices of music that we share, dance to, and sing along with.

But audio technology has undergone quite a transformation. Many people alive today have witnessed this ubiquitous technology undergo major improvements in quality, convenience, and popularity.

Back When

While earlier audio capture technology existed, the vinyl disc was the first widely adopted mainstay for over a generation. It provided relatively simple procedures for capturing audio and had limited playback bandwidth, volume, clarity, and resolution. The usable bandwidth was mostly determined by the rotational speed, which even varied from country to country based on synchronous motors and power line frequencies. It was even somewhat transportable, as we can see from the early recording of Chief Blackfoot in 1916 (see Fig. 1).

Fig. 1: Early vinyl analog record and playback technologies predate microphones, amplifiers, and motors. Source: Wikipedia

The fidelity of records was related to rotational speed and was mostly available at 78, 45, and 33 1/3 RPM. Before vinyl, several other materials were used, such as shellac, glass, and aluminum, but vinyl became the dominant form for the masses. (An interesting note: Vinyl was initially touted as unbreakable.)

While vinyl records allowed reproduction of recorded monophonic audio, they brought some audio-quality issues, such as pops, hissing, and scratches that could cause the recorded audio to skip. But vinyl discs were easy to buy, store, and use, and players were relatively cheap, giving real recorded music to the masses for the first time.

Early vinyl systems were mechanical in nature, not electronic. The earliest were motorless momentum-based steady RPM rotators, and there were no microphones, no transistors, no tubes, and no amplifiers. The development and growth of electronics changed this forever.

Tape, the Next Generation

Magnetic tape changed the recording industry in a monumental way. Composed of cellulose coated with iron oxides, magnetic tape moving across a recording head created a recording that could be erased and re-recorded. Tape was used almost exclusively to capture and record professional music and audio for many decades and is still used today by the pure-of-heart analog audiophiles.

But tape introduced errors as well. Tape had wow and flutter problems associated with the stretching and compressing of the somewhat elastic medium. In addition, fidelity was limited by the tape transport speeds, which were measured as inches per second. However, tape allowed some innovations such as stereo, dubbing, and multi-track.

Early reel-to-reel systems dominated and continued to dominate the professional audio world. But for the masses, cassettes and eight-track systems were introduced. These were easier to use, inexpensive, and could feature a record tab that would not allow something to be re-recorded. Still, fidelity was limited by tape speed, and eventually the higher oersted rating of DAT tape allowed the capture of very high bandwidths and the playback of even higher-frequency waveforms.

Better by Bits

The digital age ushered in a new era for recording when A/D converters could be used to capture waveforms, and D/A converters could be used to recreate them. Using microphones, filters, and microcontrollers, audio could be sampled and stored without any moving parts (except the diaphragm on the microphone and the speakers).

Digital recording thus eliminated the mechanically induced wow and flutter distortions of tapes, as well as the pop and hiss distortions associated with the physical medium defects of vinyl. But as with any technology, there are trade-offs here as well.

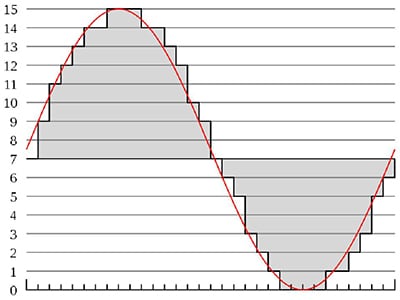

Instead of mechanical speeds being the limiting factor, the reproducible frequency range depends on the sample rate, and dynamic range depends on the sample width (the converter's bus width). Standard sample rates have evolved for a variety of applications (see Table 1). The sample rate directly affects how accurately the waveform is reproduced. In addition, the digital nature of the D/A output creates a staircase-shaped waveform whose very sharp step transitions introduce distortion (see Fig. 2). Special filtering can reduce this.

Fig. 2: While anti-aliasing filters clean up the A/D input side, filtering is also needed on the output D/A side to compensate for the sharp staircase transitions that introduce out-of-band noise and distortion. Source: Wikipedia

Input data captured from synchronous digital sampling also introduces distortion related to the sample rate. The Nyquist sampling theorem dictates that the sample rate must be at least twice the highest frequency you want to capture and/or reproduce. Any periodic waveform has an upward voltage swing and a corresponding downward voltage swing. To capture a reasonable facsimile of it, you need to sample at the high point and at the low point to pick up the entire voltage swing. As you can imagine, if your sample timeslot is not synchronized with the peaks and troughs of your maximum frequency, you will not get a true representation of the analog waveform in the digital domain. This is why faster sample rates can improve the accuracy of the captured data.
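To see why sample timing matters, here is a minimal Python sketch. The function names and the 1-kHz test tone are illustrative assumptions, not taken from any particular tool. It samples a unit-amplitude sine wave and reports the largest value captured, alongside the half-the-sample-rate limit that Table 1 lists as the frequency range.

```python
import math


def nyquist_limit_hz(sample_rate_hz):
    """Highest frequency a given sample rate can represent (half the rate)."""
    return sample_rate_hz / 2.0


def captured_peak(signal_hz, sample_rate_hz, phase_rad=0.0, n_samples=64):
    """Largest absolute sample seen when sampling a unit-amplitude sine."""
    return max(
        abs(math.sin(2.0 * math.pi * signal_hz * n / sample_rate_hz + phase_rad))
        for n in range(n_samples)
    )


if __name__ == "__main__":
    print(nyquist_limit_hz(44100))  # 22050.0
    # Sampling a 1 kHz tone at exactly 2 kHz, landing on the zero crossings:
    print(captured_peak(1000, 2000, phase_rad=0.0))
    # Same rates, but the samples now land on the peaks and troughs:
    print(captured_peak(1000, 2000, phase_rad=math.pi / 2))
    # Ten samples per cycle: most of the swing is captured regardless of phase.
    print(captured_peak(1000, 10000, phase_rad=0.0))
```

With exactly two samples per cycle, the result swings between almost nothing and the full amplitude depending only on phase. At ten samples per cycle, the captured peak stays close to the true amplitude.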

Audio aficionados can discern the bandwidth-limiting effects of under-sampling, and make the argument that the richness of the harmonics present in the actual waveforms is missing when sample rates are too low, even if they satisfy the Nyquist requirements. That is why higher sampling rates can push these limitations well into the ultrasonic region, making the losses physically inaudible.

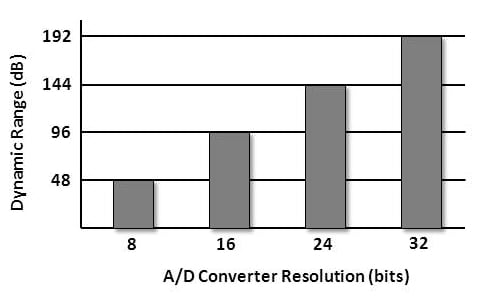

Because of the advancements in semiconductor technology, smaller geometries form wider A/Ds and D/As that can run at much faster speeds. It is not uncommon to use sample rates that are five times higher than the Nyquist limitations, leading to better captured and reproduced waveforms as well as higher dynamic ranges. Sample widths of 32 bits or more are not uncommon, and the wider the sample width, the higher the dynamic range (see Fig. 3).
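As a rough guide, each added bit of sample width buys about 6 dB of dynamic range. The sketch below uses the common 20·log10(2^N) rule of thumb for an ideal N-bit converter. Real parts deliver less because of noise and nonlinearity, and the function name is only illustrative.

```python
import math


def ideal_dynamic_range_db(bits):
    """Ratio of full scale to one quantization step, expressed in dB."""
    return 20.0 * math.log10(2 ** bits)


if __name__ == "__main__":
    for bits in (8, 16, 24, 32):
        print(bits, "bits:", round(ideal_dynamic_range_db(bits), 1), "dB")
```

This works out to roughly 96 dB for 16-bit samples and roughly 144 dB for 24-bit samples.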

Fig. 3: The dynamic range is the loudness step resolution and is directly affected by the resolution of the A/D converters. Source: Soundbooth CS4/CS5 / Digital audio

The architectures of the A/Ds and D/As have a lot to do with it too. Early low-resolution A/Ds could use flash converter technologies to provide fairly fast samples at 8 bits or so. Since each additional bit of resolution doubles the number of circuit elements needed, this approach ran out of steam with early large-geometry semiconductors.
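The doubling is easy to put numbers on. A classic flash converter compares the input against every threshold at once, so an N-bit design needs 2^N - 1 comparators. A small sketch, with a hypothetical function name:

```python
def flash_comparators(bits):
    """Comparators needed by a classic N-bit flash converter: 2**N - 1."""
    return (1 << bits) - 1


if __name__ == "__main__":
    for bits in (8, 10, 12, 16):
        print(bits, "bits:", flash_comparators(bits), "comparators")
```

Going from 8 bits to 16 bits takes the count from 255 to 65,535.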

As semiconductor speeds increased, successive-approximation architectures using sample-and-hold circuits could provide wider bus width conversions within the audio ranges. As geometries shrank, it became feasible again to use flash converters to achieve 10-, 12-, 16-, and 18-bit resolutions. Modern 24-bit flavors can use a variety of new and improved architectures including over-sampling, and 192k samples/sec has become the new audio high-end standard.
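A successive-approximation converter reuses a single comparator and resolves one bit per step, from the most significant bit down. The following is a simplified behavioral sketch of that binary search, assuming an ideal internal D/A and comparator. The name `sar_convert` and its parameters are illustrative, not any vendor's API.

```python
def sar_convert(v_in, v_ref, bits):
    """Return the output code an ideal N-bit SAR converter settles on."""
    code = 0
    for bit in reversed(range(bits)):        # MSB first, one decision per bit
        trial = code | (1 << bit)            # tentatively set this bit
        v_dac = trial * v_ref / (1 << bits)  # ideal internal D/A output
        if v_in >= v_dac:                    # comparator decision
            code = trial                     # keep the bit
    return code


if __name__ == "__main__":
    print(sar_convert(1.0, 2.0, 8))   # 128, half of full scale
    print(sar_convert(0.5, 2.0, 16))  # 16384, a quarter of full scale
```

The conversion takes one comparison per bit, so a 16-bit result needs 16 steps while the held input stays constant.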

Another factor that affects the quality of audio from input to output is the type of CODEC implemented within the digital pipeline. A CODEC (for COmpressor-DECompressor) encodes the data stream for transmission or storage using compression techniques that can be either lossy or lossless. As you may guess, lossy CODECs are more efficient but will not recreate the initial waveforms 'exactly.'

Several free and licensed CODECs are used for different purposes. These include non-compressed transmission schemes such as Pulse Code Modulation, Pulse Density Modulation, Direct Stream, and Pulse Amplitude Modulation, to name a few. Lossy compression techniques like Adaptive Differential, Adaptive Rate Optimized, Adaptive Transform Acoustic, and Dolby Digital help reduce data bandwidth and storage requirements, and do a pretty good job of recreating the initial waveform.
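To get a feel for the bandwidth and storage at stake, the data rate of uncompressed PCM follows directly from the sample rate, sample width, and channel count. The sketch below is simple arithmetic, and the function names are illustrative.

```python
def pcm_bits_per_second(sample_rate_hz, bits_per_sample, channels):
    """Raw PCM data rate with no compression or framing overhead."""
    return sample_rate_hz * bits_per_sample * channels


def pcm_megabytes_per_minute(sample_rate_hz, bits_per_sample, channels):
    """Storage for one minute of raw PCM, in megabytes (10**6 bytes)."""
    bits = pcm_bits_per_second(sample_rate_hz, bits_per_sample, channels) * 60
    return bits / 8 / 1_000_000


if __name__ == "__main__":
    # CD-style stereo: 44.1 kHz, 16 bits
    print(pcm_bits_per_second(44100, 16, 2))        # 1411200
    # High-resolution stereo: 192 kHz, 24 bits
    print(pcm_bits_per_second(192000, 24, 2))       # 9216000
    print(pcm_megabytes_per_minute(192000, 24, 2))  # about 69 MB per minute
```

A 192-kHz, 24-bit stereo stream carries about 6.5 times the data of a CD-style stream, which is the gap that compression is asked to close.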

Several lossless techniques have also proven to be quite effective. These include popular standards like MP3HD, MPEG4, RealAudio Lossless, True Audio, and WavPack, to name a few. More recently, the Free Lossless Audio CODEC (FLAC) and Apple's version, the Apple Lossless Audio CODEC (ALAC), have been gaining acceptance and attention. As the names imply, ALAC is Apple's version for use with Apple devices and services, and FLAC is an open-source, free-for-use format. Both are lossless and are claimed to sound identical, even though they use different techniques to compress the data. It is said that FLAC can compress a little better because you have some control over the compression parameters.
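The difference between lossless and lossy can be shown with a toy round trip. This sketch uses Python's general-purpose `zlib` compressor as a stand-in for a lossless audio CODEC, and simple bit truncation as a stand-in for a lossy one. Neither is how FLAC, ALAC, or any real audio CODEC works internally, and the function names and test tone are assumptions made for the example.

```python
import math
import struct
import zlib


def make_tone(freq_hz=440.0, rate_hz=44100, n_samples=4410):
    """A short 16-bit test tone as a list of integer samples."""
    return [
        int(round(16000 * math.sin(2.0 * math.pi * freq_hz * i / rate_hz)))
        for i in range(n_samples)
    ]


def lossless_round_trip(samples, level=6):
    """Pack to 16-bit PCM, compress, decompress, and unpack again."""
    fmt = "<%dh" % len(samples)
    raw = struct.pack(fmt, *samples)
    packed = zlib.compress(raw, level)  # level is a tunable, like a CODEC setting
    restored = struct.unpack(fmt, zlib.decompress(packed))
    return list(restored), len(raw), len(packed)


def lossy_round_trip(samples, keep_bits=8):
    """Throw away the low-order bits of each sample; they cannot be recovered."""
    shift = 16 - keep_bits
    return [(s >> shift) << shift for s in samples]


if __name__ == "__main__":
    tone = make_tone()
    restored, raw_len, packed_len = lossless_round_trip(tone)
    print("lossless identical:", restored == tone)
    print("bytes before and after:", raw_len, packed_len)
    print("lossy identical:", lossy_round_trip(tone) == tone)
```

The lossless path returns every sample bit for bit, whatever the compression level. The lossy path returns something close to the original but never the original itself.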

You may realize by now that a stable standard performed in software is an ideal target for hardware implementation. CODECs are no exception. The ability to take a processing-intensive task and make it part of a pipelined data stream helps reduce chip size, processor performance requirements, battery use, and costs.

In addition, advancements with on-chip dielectric isolation have allowed better quality mixed signal devices to include special filtering in the same sampling and playback devices. On-chip signal paths took advantage of differential signal paths to improve quality. Later, digital signal processing manipulated analog information in the digital domain, opening a new avenue of signal-processing capabilities and features.

Of key benefit are the multichannel capabilities of modern high-resolution A/D and D/A converters. Mere stereo is no longer good enough for modern surround sound and subwoofer-based audio systems that are high on the "wow factor" index, and audio systems are one of those technologies people love to show off.

This permits highly dense systems on a chip that can sample as well as play back multiple channels simultaneously while providing filtration, balance, faders, spectral equalization, and more. In addition, these features are not just niceties but are insisted upon by modern standards and the customer base.

Where to Start

As with any top-down design, your target application will dictate the types of technologies and devices you will be able to use. We all have constraints imposed upon us when actually doing the design, and high-end audio is no exception.

High-end audio systems span a large range from small, handheld, low-power, high-performance players and recorders, to several-dozen-channel high-end studio and broadcast panels and racks of equipment. While discrete A/Ds and D/As are available for special-purpose designs, most solutions today are more highly integrated, consisting of either dedicated devices that perform exact functions or peripherals to a controlling embedded micro.

This means the parts we have available to us will be fairly integrated devices with some features we need, and often some we don't. The key is to choose parts that have the features and benefits for your design and aren't higher-cost and more-complex overkill.

Simple and effective are the Cirrus Logic CS5343 and CS5344 parts, which are straightforward 24-bit A/D converters with basic filtering and serialization of data for use by successive digital audio processors (see Fig. 4). Supporting sample rates up to 108 kHz per channel, these parts feature digital filters and high-impedance inputs, eliminating the need for external op amps and anti-aliasing filters.

Fig. 4: High impedance, on-chip filtration, and digital serialization make this simple and elegant high-resolution A/D converter for digital audio applications an ideal choice for a high-end audio system.

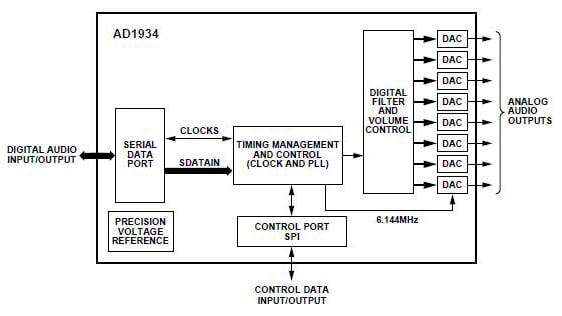

On the D/A output side of things, we also have both simpler, targeted output-only devices and more highly functional and integrated parts. A good example of a simple but effective output-only part is the Analog Devices AD1934, which provides eight single-ended D/A outputs and takes advantage of the company's patented multibit sigma-delta architecture (see Fig. 5). It uses the same PLL clocking techniques as the AD1974YSTZ to reduce EMI and eliminate the need for a second clock and is also a 3.3-V part (with 5-V-tolerant I/O).

Fig. 5: The same scalable independent peripheral functionality is useful when generating high-quality audio outputs. The integration of filters and volume controls saves space and external components, and the audio architecture has changed to analog on just the edges.

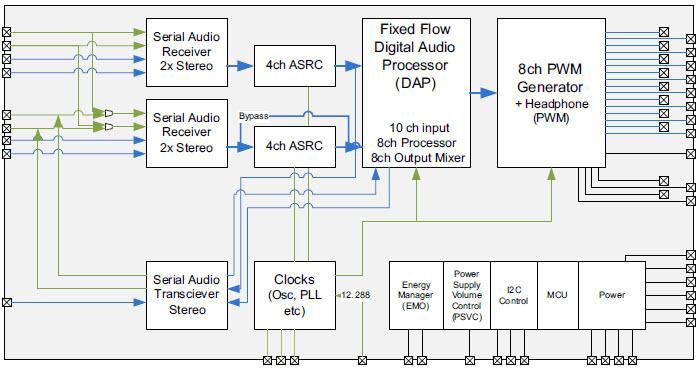

Higher functional density, if needed, can take advantage of highly integrated output processors such as TI's eight-channel TAS5548 high-definition audio processor (see Fig. 6). This part takes serial audio in and performs output processing and drive functions in a flexible, high-performance way.

Fig. 6: Maximum functionality can be squeezed into a single device, which targets multichannel audio for advanced applications. Embedded MCUs bring features like dynamic power control and energy management as well as bass and treble control over IIC.

This part can function as a sample rate converter and, according to TI, has the ability to interface seamlessly with most digital audio decoders. Another key compatibility is with the DTS-HD specs for Blu-ray HTiB designs.

Architecturally, two separate input modules handle four channels each, allowing simultaneous interfacing to potentially two different sample rates. The flexible output stages can drive eight channels of H-Bridge power stages in either single-ended or Bridge-Tied-Load configurations.

These parts are particularly well suited for digital class D amplifiers since they use a 384-kHz switching rate for the 48-, 96-, and 192-kHz sample rates. They support the AD, BD, and Ternary Modulation techniques. The 8x oversampling combined with the fourth-order filtration provides a flat noise floor from 20 Hz up to 32 kHz.

Digital filtering and DSP techniques also allow mix and shape functions, such as bass and treble tone controls and selectable corner frequencies. Note: This part is also peripheral-controlled and accessed over the IIC port.
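As an illustration of a selectable corner frequency, here is a minimal first-order low-pass filter of the kind a simple tone control might build on. This is a generic textbook one-pole filter, not the TAS5548's actual filter structure, and the names and values are assumptions chosen for the example.

```python
import math


def one_pole_lowpass(samples, corner_hz, rate_hz):
    """Smooth the input with a first-order low-pass at the given corner."""
    # Smoothing coefficient derived from the corner frequency and sample rate.
    alpha = 1.0 - math.exp(-2.0 * math.pi * corner_hz / rate_hz)
    out = []
    y = 0.0
    for x in samples:
        y += alpha * (x - y)  # move a fraction of the way toward the input
        out.append(y)
    return out


if __name__ == "__main__":
    rate = 48000
    steady = one_pole_lowpass([1.0] * rate, corner_hz=200, rate_hz=rate)
    print("steady level passes:", round(steady[-1], 6))
    # Worst case for a low-pass: a tone alternating +1, -1 at half the rate.
    buzz = [1.0 if n % 2 == 0 else -1.0 for n in range(rate)]
    filtered = one_pole_lowpass(buzz, corner_hz=200, rate_hz=rate)
    print("high tone peak after filtering:", max(abs(v) for v in filtered))
```

Changing `corner_hz` moves the point where attenuation begins, which is the software equivalent of turning a tone knob.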

Down the Road

To support audio designs, the modern, highly integrated mixed-signal approach simplifies a lot of the process. No longer are differential PCB signal pairs, analog ground planes, and copper fill needed to make quiet and effective circuit boards. Modern highly integrated and modular functions absorb a lot of external components and keep boards tight.

Expect to see more highly integrated and concentrated high-end audio systems for field recording, mixing, mastering, and even built into the next generation of instruments. Digital interfaces are replacing analog controls for sliders, pots, meters, and so on. No longer will 16-, 24-, and 48-channel mixing boards be the preferred way to master. With software-driven control and DSP functionality, the key is to capture the raw audio and let processing power be the preferred way to tweak the sound. This brings high-end audio into everyone's reach.

What can you expect today? Until content and players are available in high-resolution formats, you are just converting and playing high-resolution files on a low-resolution system, or low-resolution files on a high-resolution system. There is really no benefit, but times are changing.

With Sony announcing that it will take high-resolution audio to new places, people are paying more attention to content, format, services, standardization, and interoperability. Of course, Sony is well-positioned with its HAP-Z1ES player with 1 TB of memory. Both wireless and wired (USB) connectivity can expand this, and the HAP-Z1ES player supports all formats to date and rates up to 192 kHz.

On the cloud side, you can expect to see more high-resolution servers and services such as hiresaudio.com. The gears are just starting to mesh, and it is an exciting time to get into high-resolution audio.

Table 1 - Standard Sample Rates and Frequency Ranges for Various Applications

| Quality Level | Sample Rate | Frequency Range |
| --- | --- | --- |
| Poor AM Radio | 11,025 Hz | 0 - 5,512 Hz |
| Near FM Radio | 22,050 Hz | 0 - 11,025 Hz |
| Standard Broadcast Rate | 32,000 Hz | 0 - 16,000 Hz |
| CD/MP3 | 44,100 Hz | 0 - 22,050 Hz |
| Standard DVD | 48,000 Hz | 0 - 24,000 Hz |
| High-End DVD | 96,000 Hz | 0 - 48,000 Hz |
| High-Resolution Audio | 192,000 Hz | 0 - 96,000 Hz* |

*This is the maximum Nyquist frequency reproducible using a 192 kHz sample rate, even if speakers and amplifiers are unable to reproduce it. Source: Soundbooth CS4/CS5 / Digital audio