Making Sensor Technology Less Blurry

Image Source: gece33/GettyImages

By Paul Golata, Mouser Electronics

Published June 14, 2022

Many people wear glasses because they do not see well without them. If they drive without their glasses, they cannot clearly see things such as stop signs, and no one wants to run through an intersection and get hit by another driver. Drivers who need glasses should always wear them so they can best recognize the hazards on the road ahead (Figure 1).

Figure 1: A pair of glasses on an eyechart. (Source: flaviuz/Stock.Adobe.com)

Having the ability to detect and identify an object is at the heart of nearly every vision application. However, there are almost as many ways to accomplish this as there are reasons for doing it. This article will look at several standard sensors used to detect objects and their trade-offs.

Seeing Provides Spatial Awareness

Putting aside for the moment that optical illusions do in fact exist, the ancient proverbial wisdom is that “seeing is believing.” This means one generally desires to see something experientially prior to one’s subjective acceptance that it really exists or occurs. Electronic systems benefit from sensory inputs to help them understand and adapt to changes in their environmental surroundings. Applications including both self-driving vehicles and robotics (robots and cobots, etc.) obtain spatial awareness by incorporating different electronic technology systems that enable them to understand where they are and how they might best respond to achieve their programmed objectives and goals.

Let’s eyeball the application as it pertains to driving. Take advanced driver-assistance systems (ADAS). ADAS provides a safe human-machine interface (HMI) to increase car and road safety. ADAS sensing systems must be able to handle a wide range of velocities, unforeseen events, variable weather, and environmental situations. In vehicles, environmental sensing is performed through three major technologies: cameras, radar, and LiDAR.

Cameras

Most people know that Hollywood is full of cameras. Hollywood cameras are positioned strategically to capture every part of the scene the director needs to tell the story. Your own cell phone camera has made you a director. It allows you to capture what you are experiencing. Often you get only one opportunity to capture a moment before it is gone forever.

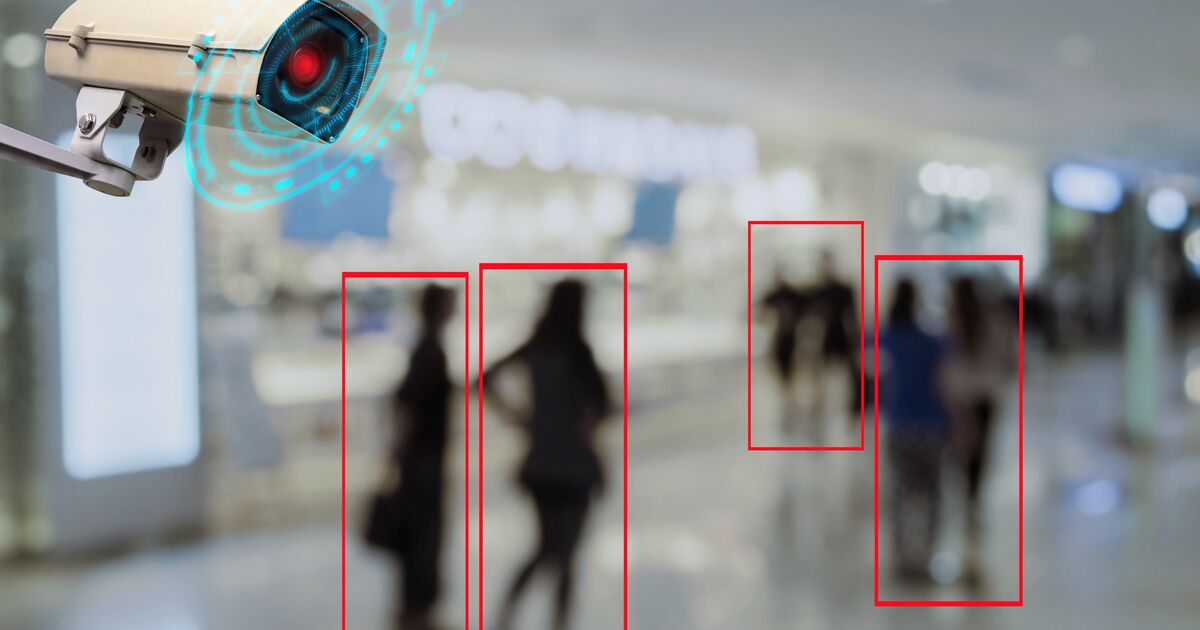

In vision applications, cameras may serve as one part of a larger electronic vision system. They are used in a way analogous to human eyesight (Figure 2). The pros and cons of using cameras include:

Figure 2: Indoor security camera and motion detection system. (Source: Vittaya_25/Stock.Adobe.com)

Pros

- May be positioned to “see” the entire context, or at least as much of it as is deemed sufficient

- Potential to “outsee” humans in various contexts

  - Through the application of invisible light sources, that is, light sources outside of the roughly 380nm–750nm spectrum that the human eye responds to

- May employ learning via

  - artificial intelligence (AI)

  - machine learning (ML)

  - neural networks (NN)

- Often less costly

- Handles weather conditions roughly as well as human eyesight does

Cons

- Requires higher computational power

- Limited vision in contexts where human eyesight is also limited or obscured

An Example

Basler ace 2 Cameras feature cost-effective software integration and a top price-performance ratio. These cameras include an optimized hardware design with a status LED on the back, an improved mount, a removable IR cut filter, and a robust M8 connector. The ace 2 cameras combine Sony’s Pregius S sensors with up to 24MP in a compact format with C-mount and global shutter. These cameras include models with Sony sensors IMX392, IMX334/IMX334ROI, IMX540, IMX541, and IMX542 with resolutions from 2.3MP to 24MP. The ace 2 cameras are available in ace 2 Basic and ace 2 Pro product lines that are tailored to different vision needs. They come in mono and color options and include a GigE or USB 3.0 interface.

Radar

As electronic engineers, many of us are aware that there are other sensing technologies that allow us to “see” what is not normally visible to the human eye. One example of this is radar. Radar is an acronym for radio detection and ranging (Figure 3). Radar employs radio waves (3MHz–110GHz) to help ascertain the distance (ranging), angle, or velocity of objects.
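Of the quantities radar can ascertain, velocity is usually derived from the Doppler shift of the returned signal. The following is a minimal Python sketch of that relationship for a radar whose transmitter and receiver are co-located. The function names and the 77GHz carrier in the usage lines are illustrative assumptions, not values taken from any particular sensor.

```python
# Speed of light in vacuum, in m/s. Radio waves in air travel at very nearly this speed.
SPEED_OF_LIGHT_MPS = 299_792_458.0


def doppler_shift_hz(radial_velocity_mps: float, carrier_hz: float) -> float:
    """Doppler shift seen by a co-located transmitter/receiver.

    Uses f_d = 2 * v * f0 / c, which holds when the target speed is far
    below the speed of light. A positive velocity means the target is approaching.
    """
    return 2.0 * radial_velocity_mps * carrier_hz / SPEED_OF_LIGHT_MPS


def radial_velocity_mps(shift_hz: float, carrier_hz: float) -> float:
    """Invert the relation above to recover radial velocity from a measured shift."""
    return shift_hz * SPEED_OF_LIGHT_MPS / (2.0 * carrier_hz)


if __name__ == "__main__":
    carrier = 77e9  # assumed carrier frequency, for illustration only
    shift = doppler_shift_hz(30.0, carrier)  # target closing at 30 m/s
    print(f"Doppler shift: {shift:.1f} Hz")
    print(f"Recovered velocity: {radial_velocity_mps(shift, carrier):.3f} m/s")
```

Because the shift scales with the carrier frequency, a higher carrier produces a larger shift for the same target speed, which makes small velocity differences easier to resolve.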

Many of us are familiar with radar from the way aircraft are tracked as they fly across the skies. It provides a way to “see” where an airplane is. For the applications considered here (vehicles and robotics), mmWave radar (30–300GHz) is often employed. The pros and cons of using radar include:

Figure 3: Radar. (Source: your123/Stock.Adobe.com)

Pros

- Small package size and antenna

- Large bandwidth

- High Doppler frequency

- High levels of integration

- Reliable

- Affordable

Cons

- Not as precise as LiDAR

mmWave Sensors

Texas Instruments mmWave Sensors offer both Industrial and Automotive options. The mmWave SDK simplifies sensing, letting developers evaluate sensing projects in less than thirty minutes. Spatial and velocity resolution can be up to three times higher than with traditional solutions. CMOS single-chip sensors shrink design size by integrating an RF front-end with a DSP and MCU.

AWR

The AWR Series of Automotive mmWave Sensors enhances driving experiences, making them safer and easier by analyzing and reacting to the nearby environment.

- Front long-range radar: Detect motorcycles, cars, or other dynamic objects with angular accuracy of less than 1 degree and at speeds up to 300km/hour

- Multi-mode radar: Detect complex urban scenes with run-time configurability and interference detection capability

- Short-range radar: Detect motorcycles, cars, and other dynamic objects with high accuracy at distances up to eighty meters

IWR

The IWR Series of Industrial mmWave Sensors provides unprecedented accuracy and robustness by detecting the range, velocity, and angle of objects.

- Level transmitter: Enable accuracy of <100 microns with precision of ±15 microns at 3 sigma

- Radar for transport: Demonstrate robust detection of incoming moving vehicles at a distance of sixty meters and velocities up to 100 km/hour

- Drones: Measure power lines at distances greater than thirty meters and easily distinguish ground from water

- Building automation: Enable the detection and tracking of people both indoors and outdoors with sensing that is robust to rain, fog, smoke, and ambient lighting

LiDAR

Instead of radio waves, the same concept can be applied to light waves. Light detection and ranging (LiDAR) employs light pulses to determine the distance (ranging), angle, or velocity of objects. The pros and cons of using LiDAR include:

Pros

- Accuracy

- Precision

- 3D imaging

- Immunity to external lighting conditions

- Relatively low computational power required

Cons

- Costly

- Potential difficulties in signal integrity if the light pulses are scattered due to environmental factors including rain, snow, and fog

- Colorblind

- Unable to provide information beyond what relates to velocity and shape

SONAR and Time of Flight (ToF)

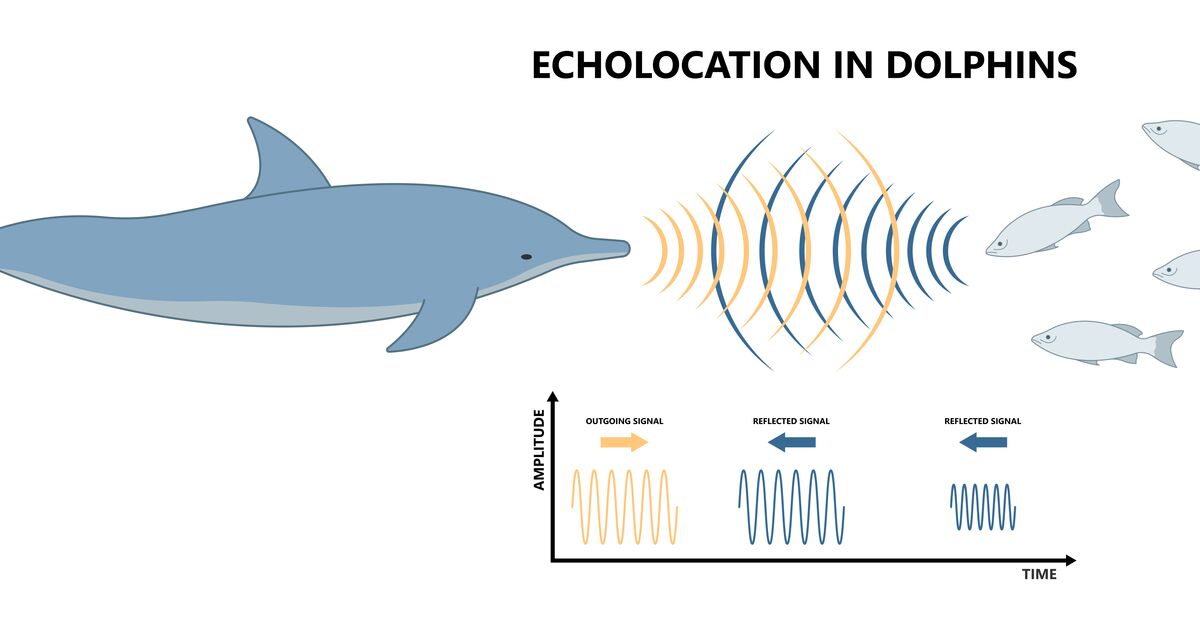

Most people know bats can find insects to eat at night through echolocation (biological sonar). Bats emit a quick sound into their surroundings and then listen for the faint echoes that return. These echoes help them locate and identify objects. Underwater, dolphins employ a similar strategy (Figure 4).

Something akin to this happens with time of flight (ToF), except that it usually relies on electromagnetic radiation rather than sound. ToF measures the time taken by an object, particle, or wave to travel a distance through a medium. This information is then analyzed to measure quantities such as velocity or path length, or to learn about the properties of the particle or medium. ToF is helpful in robotic or HMI applications for things such as proximity sensing and gesture recognition.
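The arithmetic behind an echo-based ToF measurement is short enough to sketch in Python. The example below assumes the signal travels to the target and back along the same path, so the one-way distance is half of speed multiplied by time. The function names are hypothetical, and the speed of sound used is the commonly quoted figure for air at about 20°C.

```python
SPEED_OF_LIGHT_MPS = 299_792_458.0  # light in vacuum; very nearly the same in air
SPEED_OF_SOUND_AIR_MPS = 343.0      # approximate, dry air at about 20 degrees C


def tof_distance_m(round_trip_s: float, speed_mps: float) -> float:
    """One-way distance to a target from the round-trip time of an echo."""
    if round_trip_s < 0 or speed_mps <= 0:
        raise ValueError("time must be non-negative and speed must be positive")
    return speed_mps * round_trip_s / 2.0


def round_trip_time_s(distance_m: float, speed_mps: float) -> float:
    """Round-trip time an echo needs to cover a given one-way distance."""
    if distance_m < 0 or speed_mps <= 0:
        raise ValueError("distance must be non-negative and speed must be positive")
    return 2.0 * distance_m / speed_mps


if __name__ == "__main__":
    # A sound echo that returns after 10 ms, as in echolocation.
    print(tof_distance_m(0.010, SPEED_OF_SOUND_AIR_MPS), "m")
    # Time a light pulse needs to reach a target 4 m away and return.
    print(round_trip_time_s(4.0, SPEED_OF_LIGHT_MPS), "s")
```

The comparison shows why optical ToF demands fast electronics: a light pulse covers in tens of nanoseconds a round trip that takes sound tens of milliseconds.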

Figure 4: Bio sonar sound helps animals such as bats and dolphins locate objects. (Source: rumruay/Stock.Adobe.com)

Time-of-Flight Sensor

Take, for example, the STMicroelectronics VL53L5CX 8x8 Multi-Zone Time-of-Flight Sensor (Figure 5). This product integrates a single-photon avalanche diode (SPAD) array, physical infrared filters, and diffractive optical elements (DOE) in a miniature reflowable package. The VL53L5CX offers multi-zone distance measurements with up to 8x8 real-time native zones and a wide 63° diagonal field of view. Each zone of the sensor can measure the distance to a target at up to 4 meters with a maximum frequency of 60Hz. With ST’s patented histogram algorithms, the VL53L5CX detects multiple objects within the field of view (FoV) and ensures immunity to cover glass crosstalk beyond 60cm.

Figure 5: STMicroelectronics VL53L5CX 8x8 Multi-Zone Time-of-Flight Sensor. (Source: Mouser Electronics)

These features allow the VL53L5CX to achieve the best ranging performance in various ambient lighting conditions with various cover glass materials. The use of a DOE above the vertical-cavity surface-emitting laser (VCSEL) enables a square FoV to be projected onto the scene. The receiver lens focuses the reflection of this light onto a SPAD array.
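To give a feel for what an application might do with multi-zone output, here is a minimal Python sketch that finds the nearest valid target in one 8x8 frame. It does not use ST's driver API. The frame is assumed to arrive as 64 distances in millimeters in row-major order, with `None` marking a zone that has no valid target; that layout, the function name, and the sample data are all hypothetical.

```python
from typing import Optional, Sequence, Tuple

GRID_SIZE = 8        # 8x8 zones, as described above
MAX_RANGE_MM = 4000  # up to 4 meters per zone, as described above


def nearest_zone(
    frame_mm: Sequence[Optional[int]],
) -> Optional[Tuple[int, int, int]]:
    """Return (row, column, distance_mm) of the closest valid zone, or None.

    Assumes a row-major frame of GRID_SIZE * GRID_SIZE readings in which
    None marks a zone without a valid target (an assumed convention).
    """
    if len(frame_mm) != GRID_SIZE * GRID_SIZE:
        raise ValueError("frame must contain exactly 64 zone readings")

    best: Optional[Tuple[int, int, int]] = None
    for index, distance in enumerate(frame_mm):
        # Skip missing readings and anything outside the stated range.
        if distance is None or not 0 < distance <= MAX_RANGE_MM:
            continue
        if best is None or distance < best[2]:
            best = (index // GRID_SIZE, index % GRID_SIZE, distance)
    return best


if __name__ == "__main__":
    # Synthetic frame: a wall at 3 m with a hand at 45 cm in one zone.
    frame = [3000] * (GRID_SIZE * GRID_SIZE)
    frame[19] = 450
    print(nearest_zone(frame))
```

A gesture or proximity application could run a check like this on each frame and track how the nearest zone moves across the grid over time.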

Sensor Fusion: A Way Forward

One way forward is through sensor fusion. Sensor fusion combines multiple sensors and software technologies for increased awareness of context. Some combination of cameras, radar, LiDAR, and ToF, along with other sensors such as those employing microelectromechanical systems (MEMS), can increase system performance and resiliency. Sensor fusion is a way to optimize benefits and reduce limitations.
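As one simple illustration of how combining sensors can reduce limitations, the Python sketch below fuses independent distance estimates by weighting each with the inverse of its noise variance. This is a common textbook technique chosen for illustration, not a method described in this article, and the sensor noise figures in the usage lines are made up.

```python
from typing import Iterable, Tuple


def fuse_estimates(readings: Iterable[Tuple[float, float]]) -> Tuple[float, float]:
    """Inverse-variance weighted fusion of independent estimates.

    Each reading is a (value, variance) pair. Returns the fused value and
    the variance of the fused value, assuming the errors are independent.
    """
    weight_sum = 0.0
    weighted_value_sum = 0.0
    for value, variance in readings:
        if variance <= 0:
            raise ValueError("variance must be positive")
        weight = 1.0 / variance  # less noisy sensors get more weight
        weight_sum += weight
        weighted_value_sum += weight * value
    if weight_sum == 0.0:
        raise ValueError("at least one reading is required")
    return weighted_value_sum / weight_sum, 1.0 / weight_sum


if __name__ == "__main__":
    # Hypothetical readings of the same target distance, in meters.
    radar = (10.4, 0.25)   # assumed variance, for illustration
    camera = (9.8, 1.00)   # assumed variance, for illustration
    value, variance = fuse_estimates([radar, camera])
    print(f"fused distance: {value:.2f} m, variance: {variance:.2f}")
```

Under the independence assumption, the fused variance is never larger than that of the best individual sensor, which is the sense in which fusion can improve on any single input.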

Conclusion

We have covered different types of object detection sensors and examined the advantages and trade-offs of each. As we learned, not all cameras are created equal. Much of it comes down to the application. We also learned that developers are making key advances with new sensors that closely mimic the human eye’s ability to perceive changes in its visual field. In some cases, we’re using sensor technologies that go beyond what our eyes can see. Perhaps one day prescription glasses will become obsolete.