Seeing is Believing: Machine Vision

(Source: YuriHoyda/Shutterstock.com)

Like a human operator, an advanced machine such as a robot needs visual context and awareness to make the right decisions and take the appropriate action. Giving a sophisticated machine the ability to see starts out simply enough, even though complex technology choices are involved. Image recognition, however, is a complex subsystem with essentially all the design elements of a complete system. In the end, all the pieces must come together for the vision sensing system to meet its performance, cost, and timing goals. As in most cases, the application and design goals dictate the right technology choices.

Looking Ahead

Perhaps one of the greatest limitations to advanced robotics is the vision system, especially when an extensive amount of visual data is collected and must be processed in real time. This challenge persists even as compelling needs exist for vision sensors in a variety of manufacturing systems. Depending on the source, market research companies expect compound annual growth rates of 8% to 9% in the 2015 to 2021 timeframe.

According to one recent report, "Machine Vision Market worth 12.50 Billion USD by 2020", "The factors driving the machine vision market are increasing need for quality inspection and automation, growing demand for vision guided robotics system in the manufacturing plants, growing number of regulatory mandates in manufacturing industries, and growing demand for application-specific machine vision systems among the consumers." Thus, increasing automation increases the need for more capable robots and more capable vision systems.

Robots in Action

In fact, robots present some very interesting and diversified examples for application-specific vision systems. Four rather different applications bring out the diversity: humanoid, flying, medical, and plumbing robots.

Humanoid robots have the most visibility, not only in the technical press but also in the general media. Some even have name recognition, like Honda's ASIMO humanoid robot. In pursuit of duplicating human activity and the ability to perform tasks, manufacturers including Sony, Samsung, and others have amazed audiences with human-sized and, in many cases, human-looking robots that perform tasks from bending and lifting to playing soccer and even singing and dancing. These robots must constantly gather three-dimensional sensor data to move without falling, all while making several decisions. Fortunately, they have a reasonably large volume in which to house all the elements of the vision system.

Figure 1: Robots in most applications need the ability to see before they take the next step. (Source: "Asimo at a Honda factory" by Vanillase. Available for use under the CC BY 3.0 license (creativecommons.org/licenses/by/3.0/), at Wikipedia.org.)

In contrast to an earthbound humanoid, flying robots or drones add the need for a small and light form factor to the performance requirements. Since the video surveillance system is the primary sensor for most drones, a variety of smart cameras that integrate the vision sensor, optics, and data processing may be required.

Machine vision is also used in the medical field. Wireless capsule endoscopy (WCE) is a diagnostic technique that allows doctors to view the gastrointestinal (GI) tract non-surgically instead of performing more complicated or risky procedures. However, it can take hours to review the resulting video of the intestine for anomalies associated with cancer or other diseases. Video analysis using machine-vision techniques is therefore another way that robotic systems have been adapted, in this case to help parse the data from images captured by a "PillCam" as it travels through the GI tract. The PillCam, first introduced by Given Imaging[i] in 2001, has been used on more than 1.2 million patients worldwide since then. The future of endoscopy as a disruptive technology[ii] is to advance beyond a passive pill camera and give the doctor a means to control the movement of the pill so that particularly pathological areas can be observed. Whether or not this kind of endoscopy will be categorized as robotics remains to be seen.

Nevertheless, common system elements in all of these applications include the image sensor, software, and computing power to process and analyze the accumulated data.

Image Sensing

In all these applications, two technologies provide the vision sensing capability for digital image capturing: charge-coupled device (CCD) and CMOS-based imaging systems.

In a CCD sensor, light received at a photoactive region is stored as an electrical charge and then converted to voltage, buffered and output as an analog signal. In contrast, in a CMOS sensor each pixel has a photoreceptor for charge-to-voltage conversion. With integrated amplifiers, noise-correction, and digitization circuitry, the CMOS sensor delivers a digital output.
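The signal chain described above (light to charge, charge to voltage, voltage to a digital value) can be sketched numerically. This is a minimal sketch rather than a model of any particular sensor: the function name and every default value (quantum efficiency, conversion gain, ADC full-scale voltage, and bit depth) are illustrative assumptions.

```python
def pixel_to_digital(photons, quantum_efficiency=0.6,
                     conversion_gain_uv_per_e=50.0,
                     adc_full_scale_v=1.0, adc_bits=10):
    """Convert a photon count at one pixel into a digital number.

    All default values are hypothetical and chosen only for illustration.
    """
    if photons < 0:
        raise ValueError("photon count cannot be negative")
    # Light to charge: only a fraction of photons generate electrons.
    electrons = photons * quantum_efficiency
    # Charge to voltage: conversion gain is given in microvolts per electron.
    voltage = electrons * conversion_gain_uv_per_e * 1e-6
    # The ADC input saturates at its full-scale voltage.
    voltage = min(voltage, adc_full_scale_v)
    # Voltage to digital code.
    max_code = (1 << adc_bits) - 1
    return round(voltage / adc_full_scale_v * max_code)


if __name__ == "__main__":
    for photons in (0, 10_000, 1_000_000):
        print(photons, "photons ->", pixel_to_digital(photons))
```

In a CCD, the final digitization step typically happens off-chip, after the analog output; in a CMOS sensor, the whole chain can sit on the same die as the pixel array.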

As with CMOS technology used in other system design areas, imaging CMOS has the advantage of ease of integration. It also consumes less energy and generates less heat. With their digital output, CMOS sensors can be controlled at the pixel level in more ways than CCDs. However, their noise performance and sensitivity are lower than those of CCD sensors.

The distinct strengths of CCD and CMOS image sensors have been established for many years. Even as pixel counts and resolution have increased, both image sensing technologies still compete in many markets. A detailed comparison for specifying and selecting the right sensor for the application can include spectral response and lighting requirements, such as large area to ultra-small area with low light and high dynamic range. 3D applications in robots could give CMOS an ultimate advantage as the technology integrates more functionality to deal with complex application issues.

Determining the Results

The image sensor is the most obvious step towards achieving a vision system. The next phase requires complex software algorithms and high-speed data processing. Robots, drones and even cars that navigate autonomously need a 3-dimensional understanding of their surroundings. For 3D vision, several algorithms have been developed including Simultaneous Localization and Mapping (SLAM), Structure from Motion (SfM), Stereo Visual Odometry and others. The goal is high resolution and fast processing of the data. Ongoing efforts in many companies and organizations seek to improve today's capabilities.
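To make the idea of 3D understanding concrete, the sketch below shows the standard pinhole-camera stereo relation, Z = f * B / d, which recovers depth from the pixel disparity between two horizontally offset cameras. It is a minimal illustration and not an implementation of SLAM, SfM, or visual odometry; the function name and the example camera values are assumptions.

```python
def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Return depth in meters for a rectified stereo pair.

    focal_length_px: focal length expressed in pixels
    baseline_m:      distance between the two camera centers in meters
    disparity_px:    horizontal shift of the same point between the images
    """
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical rig: 800 px focal length, 12.5 cm baseline.
    for disparity in (100, 50, 25):
        depth = depth_from_disparity(800, 0.125, disparity)
        print(f"disparity {disparity} px -> depth {depth} m")
```

Because depth is inversely proportional to disparity, distant objects produce small disparities, so a one-pixel matching error matters far more at long range than at short range. This is one reason resolution and processing speed pull against each other in 3D vision.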

Determining if an algorithm accomplishes its goals requires high-speed digital signal processor (DSP) technology capable of executing the algorithms. Today, one of the approaches for processing massive amounts of data involves cloud/server processing. However, increasingly powerful DSPs provide alternatives.

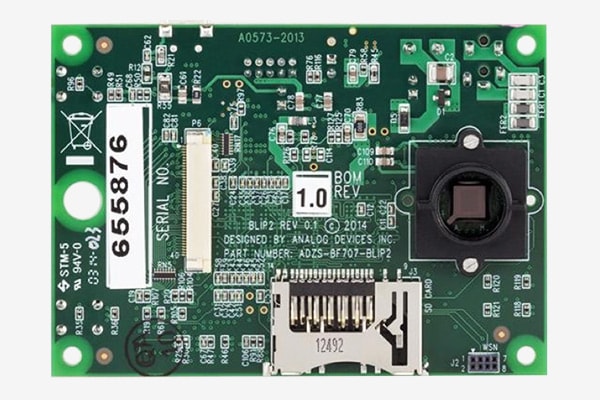

For example, DSPs such as Analog Devices Blackfin® 16-/32-bit embedded processors can handle complex image processing and also have software flexibility and scalability for other application aspects that could include audio, video, voice processing, multimode baseband and packet processing, control processing, and real-time security. The Blackfin® Low Power Imaging Platform (BLIP) addresses several indoor and outdoor image sensing applications.

Figure 2: The ADZS-BF707-BLIP2 BLIP hardware platform is delivered with a preloaded occupancy software module. (Source: Analog Devices)

The vision portion of a system is a sufficiently complex project on its own. When any of these other system aspects come into play, development platforms, evaluation systems, and evaluation boards simplify sophisticated DSP design. For example, the ADZS-BF707 EZ-KIT Lite® Blackfin Image Processing Toolbox includes image processing primitives designed to enable faster development of complex image or video processing solutions on the Blackfin DSP. Optimized to run on Analog Devices' Blackfin BF-5xx and BF-60x processor families, the primitive functions are part of a self-contained software module. Some applications can benefit from the library's MISRA-C compliance. In any case, demo code in the toolbox shows how to use these primitives on the Blackfin DSP.
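To give a sense of what an image processing primitive does, the sketch below applies a 3x3 Sobel operator to a grayscale image to highlight edges. It is a generic, unoptimized illustration in plain Python and is not the Blackfin Image Processing Toolbox API; the function name, the list-of-rows image format, and the |gx| + |gy| magnitude approximation are assumptions made for this example.

```python
def sobel_magnitude(image):
    """Return an edge-strength map for a grayscale image.

    image is a list of equal-length rows of integer pixel values.
    Border pixels are left at zero because the 3x3 window does not fit.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    kernel_x = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))   # horizontal gradient
    kernel_y = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))   # vertical gradient
    result = [[0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gy = 0
            for dy in range(3):
                for dx in range(3):
                    pixel = image[y + dy - 1][x + dx - 1]
                    gx += kernel_x[dy][dx] * pixel
                    gy += kernel_y[dy][dx] * pixel
            # |gx| + |gy| avoids a square root, a common embedded shortcut.
            result[y][x] = abs(gx) + abs(gy)
    return result


if __name__ == "__main__":
    # A dark-to-bright vertical edge between columns 1 and 2.
    frame = [[0, 0, 255, 255] for _ in range(4)]
    for row in sobel_magnitude(frame):
        print(row)
```

An optimized DSP library would typically provide routines of this kind tuned for the processor's memory layout and arithmetic units, which is where most of the development time is saved.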

Figure 3: The ADZS-BF707 EZ-KIT Lite with its primitives simplifies the development of a vision system. (Source: Analog Devices)

Robotic vision system designers certainly have choices for the computing portion of the system.

In some applications, users may want to connect a camera streaming uncompressed data into a PC. For an application like this, Infineon Technologies EZ-USB FX3 SuperSpeed Controllers can provide the solution. Firmware in the chip converts the data from the image sensor into a format compatible with the USB Video Class (UVC) for the USB 3.0 Host PC. This conformance allows the camera to operate using built-in OS drivers and makes the camera compatible with Host applications.
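A quick way to check whether an uncompressed stream fits on a USB 3.0 link is to compare its raw bit rate with the usable bus bandwidth. The sketch below is a back-of-the-envelope estimate only: the 5 Gbit/s SuperSpeed signaling rate and 8b/10b line encoding are properties of USB 3.0, while the `protocol_efficiency` factor, the function names, and the example video format are assumptions for illustration.

```python
USB3_SIGNALING_BPS = 5_000_000_000   # USB 3.0 SuperSpeed line rate
ENCODING_EFFICIENCY = 8 / 10         # 8b/10b line encoding


def uncompressed_video_bps(width, height, bits_per_pixel, fps):
    """Raw bit rate of an uncompressed video stream."""
    return width * height * bits_per_pixel * fps


def link_utilization(video_bps, protocol_efficiency=0.8):
    """Fraction of the usable USB 3.0 bandwidth the stream consumes.

    protocol_efficiency is an assumed allowance for packet and
    protocol overhead, not a measured figure.
    """
    usable_bps = USB3_SIGNALING_BPS * ENCODING_EFFICIENCY * protocol_efficiency
    return video_bps / usable_bps


if __name__ == "__main__":
    # Hypothetical format: 1920x1080, 16 bits per pixel, 30 frames/s.
    rate = uncompressed_video_bps(1920, 1080, 16, 30)
    print(f"stream: {rate / 1e6:.1f} Mbit/s")
    print(f"link utilization: {link_utilization(rate):.1%}")
```

Under these assumptions, a 1080p stream at 30 frames per second needs roughly 1 Gbit/s, which exceeds the 480 Mbit/s of USB 2.0 but fits comfortably within USB 3.0.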

An Easily Forgotten Design Detail: Connectors

Another critical aspect of any complex system, and especially an image recognition system, is the interconnections. The high-speed transmission of large amounts of data requires both shielded signals and power. Designed specifically for robotic applications, Molex Compact Robotic Connectors (CRC™) are a shielded rectangular I/O connector system that provides 7.0A to 15.0A of signal/power capability in up to 50 circuits. Targeting small industrial robotics and factory automation equipment, the panel-mounted system takes a fraction of the space of industry-standard heavy-duty connectors.

To ease assembly and limit inventory to a single part number, the connectors are offered in kit form. The housings and shells accept either male or female inserts, which increases design flexibility. These features make it easier to bring the various hardware elements of a vision system together.

Figure 4: Connectors for robotic applications. (Source: Molex)

It All Comes Together

Robotic visual intelligence is recognized as an area where significant progress can lead to improved results in many existing applications and broaden the market for new uses. Including all of the system aspects, from vision sensing to algorithms, digital signal processing, and even the connectors, can not only make a difference; it could make the difference.

i Y. Chen and J. Lee, "A Review of Machine-Vision-Based Analysis of Wireless Capsule Endoscopy Video," Diagnostic and Therapeutic Endoscopy, vol. 2012, Article ID 41803

ii G. Ciuti, A. Menciassi, and P. Dario, "Capsule endoscopy: from current achievements to open challenges," IEEE Reviews in Biomedical Engineering, vol. 4, pp. 59-72, 2011.