Implementing TinyML: Introduction to Libraries, Platforms, and Workflows

Image Source: NicoElNino/Stock.adobe.com

By Mark Patrick for Mouser Electronics

Published August 22, 2023

Getting started with TinyML can be a daunting experience. Over time, machine learning (ML) has evolved and gained popularity in edge-based industrial applications. Traditionally, selecting a suitable neural network model, training it, and tweaking it for deployment has been a complex process. However, help is at hand in the form of a growing number of artificial intelligence (AI)/ML resources designed specifically for TinyML applications.

In this article, we review and explain the popular methods used by TensorFlow Lite, Edge Impulse, and Fraunhofer AIfES in making the training and deployment of an AI model on a resource-limited microcontroller a straightforward process.

Deploying Edge Applications

In an industrial motor vibration monitoring application, developers start the design process by collecting vibration sensor data from tens of different motors, typically using an accelerometer. Each motor should be at a different stage of potential failure so that the collected data is representative, and the developers also need to record data from a motor in perfect working order. They then select a suitable algorithm. The algorithm, essentially lines of code, can discover the rules that identify vibration signatures departing from a perfect operational state. The development team trains the algorithm to make predictions from the sensor data without having to understand the complex relationships within the individual vibration measurements. The trained ML algorithm becomes a system model that produces predictions of a motor's operational condition. It is important to stress that while an ML model is a beneficial resource, its inference yields predictions, estimates, and approximations based on the training data rather than exact answers.
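To make the idea of learning a "healthy" signature from data concrete, here is a minimal sketch in Python. It is deliberately not a neural network: it swaps in a simple statistical baseline (the spread of RMS amplitude across healthy windows) to show the train-then-predict pattern. The function names, the sample values, and the idea of scoring in standard deviations are all assumptions made for illustration.

```python
import math
import statistics


def rms(window):
    """Root-mean-square amplitude of one window of accelerometer samples."""
    return math.sqrt(sum(x * x for x in window) / len(window))


def fit_baseline(healthy_windows):
    """'Train': learn the mean and spread of RMS from healthy-motor windows.

    Needs at least two windows, and they must not all have identical RMS.
    """
    values = [rms(w) for w in healthy_windows]
    return statistics.mean(values), statistics.stdev(values)


def anomaly_score(window, baseline):
    """'Predict': how many standard deviations this window's RMS lies from the baseline."""
    mean, std = baseline
    return abs(rms(window) - mean) / std


# Hypothetical accelerometer windows (in g) from a motor in good working order.
healthy = [
    [0.10, -0.12, 0.11, -0.09],
    [0.08, -0.10, 0.12, -0.11],
    [0.11, -0.11, 0.10, -0.10],
]
baseline = fit_baseline(healthy)

# Hypothetical window from a motor vibrating far more strongly than the baseline.
suspect = [0.45, -0.50, 0.48, -0.47]
print(f"anomaly score: {anomaly_score(suspect, baseline):.1f} standard deviations")
```

As with any model, the score is an estimate derived from the training data. A real project would use far more windows, richer features, and one of the frameworks discussed below.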

Edge-based inference is gaining popularity for several reasons. First, there is no need to stream data back to a cloud server for inference, which avoids network latency and bandwidth costs. An added benefit is that privacy concerns are eased because no data is moved to or stored on cloud servers. The microcontrollers selected for these edge applications typically consume very little power, with many capable of long-term battery operation, easing installation complexities. To put this into context, many high-performance GPUs used for scientific inference applications consume hundreds of watts of power, perhaps 500 watts in some cases. A microcontroller's typical power consumption is in the milliwatt range, or even down to microwatts.
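A rough back-of-the-envelope calculation shows what that gap in power means in practice. The sketch below assumes a 225 mAh coin cell at a nominal 3 V and a constant 1 mW microcontroller load. Both figures are illustrative assumptions, and the calculation ignores real battery discharge behaviour and duty cycling.

```python
def runtime_hours(battery_wh, load_watts):
    """Ideal runtime: stored energy (Wh) divided by a constant load (W)."""
    return battery_wh / load_watts


# Assumed battery: 225 mAh coin cell at a nominal 3 V, about 0.675 Wh of energy.
COIN_CELL_WH = 0.225 * 3.0

# Assumed loads: 1 mW for a microcontroller, 500 W for a high-end GPU.
mcu_hours = runtime_hours(COIN_CELL_WH, 0.001)
gpu_seconds = runtime_hours(COIN_CELL_WH, 500.0) * 3600

print(f"Microcontroller at 1 mW: {mcu_hours:.0f} hours (about {mcu_hours / 24:.0f} days)")
print(f"GPU at 500 W: {gpu_seconds:.2f} seconds")
```

Under these assumptions, the same cell that would keep the microcontroller running for roughly four weeks holds only a few seconds' worth of energy at the GPU's power level.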

Resources to Speed Development and Deployment

As the concept of inference at the edge has gathered momentum, several resources have emerged that are specifically designed for use with low-power microcontrollers.

TensorFlow Lite for Microcontrollers

One of the established ML resources is TensorFlow. Initially developed by Google in 2015, it is an end-to-end open-source platform for ML offering a comprehensive, flexible ecosystem of tools, libraries, and community resources. TensorFlow permits developers to build and deploy a diverse range of ML applications, including scientific, medical, and commercial ones.

The TensorFlow Lite for Microcontrollers open-source library, launched in 2017 by Google, was explicitly designed to accommodate the growing interest in conducting inference on low-power microcontrollers with only tens of kilobytes of memory. Its core runtime fits in just 16 KB of memory on an Arm® Cortex®-M3 processor and can run many basic neural network models. It can run on 'bare metal' because it does not require operating system support, standard C or C++ libraries, or dynamic memory allocation. The library is written in C++11 and requires a 32-bit microcontroller.

The models that TensorFlow Lite for Microcontrollers employs are converted, microcontroller-optimised representations of those available within the TensorFlow training environment. TensorFlow Lite for Microcontrollers is well supported, extensively tested on the Arm Cortex-M series, and ported to other MCU architectures, such as the Tensilica-based Espressif ESP32 series and the Synopsys ARC processor core series. Developers can find a comprehensive guide to implementing TensorFlow Lite for Microcontrollers at https://www.tensorflow.org/lite/microcontrollers.

In addition to TensorFlow Lite for Microcontrollers, another optimised set of library resources, TensorFlow Lite, is available for Android, iOS, and embedded Linux-based single-board computers such as the Raspberry Pi and the Coral Edge TPU.

Here is the workflow process using TensorFlow Lite for Microcontrollers (Source: https://www.tensorflow.org/lite/microcontrollers):

- Train a model:

  - Generate a small TensorFlow model that can fit your target device and contains supported operations.

  - Convert to a TensorFlow Lite model using the TensorFlow Lite converter.

  - Convert to a C byte array using standard tools to store it in a read-only program memory on device.

- Run inference on device using the C++ library and process the results.
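The "convert to a C byte array" step is often done with a command-line tool such as `xxd -i`. As an illustration of what that step produces, the following sketch does a similar job in plain Python. The function name `to_c_array`, the array name `g_model`, and the demo bytes are assumptions for this example and are not part of the TensorFlow tooling.

```python
def to_c_array(data: bytes, name: str = "g_model", per_line: int = 12) -> str:
    """Render raw bytes as C source: a const byte array plus a length variable."""
    lines = []
    for i in range(0, len(data), per_line):
        chunk = data[i:i + per_line]
        lines.append("  " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    body = "\n".join(lines)
    # 'const' lets the linker place the array in read-only program memory (flash).
    return (
        f"const unsigned char {name}[] = {{\n{body}\n}};\n"
        f"const unsigned int {name}_len = {len(data)};\n"
    )


# Placeholder bytes standing in for a converted model file (not a real model).
demo = bytes([0x1C, 0x00, 0x00, 0x00, 0x54, 0x46, 0x4C, 0x33])
print(to_c_array(demo))
```

In a real project, `data` would be the contents of the converted model file, read for example with `pathlib.Path("model.tflite").read_bytes()` (the filename is hypothetical), and the generated source would be compiled into the firmware alongside the C++ library.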

Edge Impulse

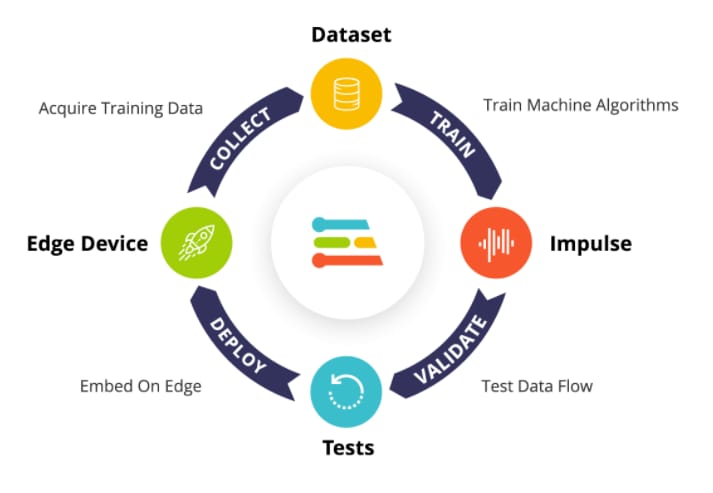

Edge Impulse provides a complete ML training-to-deployment platform (Figure 1) for embedded microcontroller devices. Estimated to be used on over 20,000 projects worldwide, Edge Impulse aims to take an embedded ML project from inception to production in the shortest possible time, cutting time-to-deployment from years to weeks. The emphasis is on embedded devices that are equipped with various sensors, including audio and vision, and deployed in large volumes. Target embedded devices range from resource-constrained, low-power microcontrollers to more capable microprocessors and CPUs. Supported model types include TensorFlow and Keras.

Figure 1: Edge Impulse provides a comprehensive platform for microcontroller ML development. (Source: Edge Impulse)

Typical ML applications for Edge Impulse include predictive maintenance, asset tracking and monitoring, and human and animal sensing in industrial, logistics, and healthcare markets.

Edge Impulse subscribes to the code of conduct of the Responsible AI Institute. It requires users to agree to a license confirming that their application does not involve criminal, surveillance, or defence-based usage.

Figure 2 illustrates the model-testing feature of Edge Impulse. In this example, an STMicroelectronics MCU is used with a digital microphone to recognise two words: house and zero. The model-testing output shows the probability percentage for each word, background noise, and other unclassified words.

Figure 2: The model-testing feature of Edge Impulse. (Source: Edge Impulse)
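To illustrate how per-class probabilities like those in Figure 2 are typically turned into a decision, here is a small sketch. It is not Edge Impulse's API. It assumes the classifier emits one raw score per class, converts the scores to probabilities with a softmax, and accepts the top label only above a hypothetical confidence threshold of 0.8. The label names follow the example above, and the scores are invented.

```python
import math


def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(scores, labels, threshold=0.8):
    """Return the most likely label, or 'uncertain' if it falls below the threshold."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    label = labels[best] if probs[best] >= threshold else "uncertain"
    return label, dict(zip(labels, probs))


LABELS = ["house", "zero", "noise", "unknown"]

# Hypothetical raw scores for one audio window.
label, probs = classify([4.0, 0.5, 0.2, 0.1], LABELS)
print(label)
for name, p in probs.items():
    print(f"  {name}: {p * 100:.1f}%")
```

The threshold is a design choice. A higher value reduces false triggers at the cost of missing some genuine keywords.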

Fraunhofer AIfES

German research institute Fraunhofer has developed the AI for Embedded Systems (AIfES) framework with a platform-independent library in C. AIfES is open-source and available under the GNU General Public License (GPL). It relies only on standard libraries and builds with the GNU Compiler Collection (GCC), which significantly eases implementation, and it runs on virtually any hardware device, from 8-bit microcontrollers to more capable smartphone processors and desktop computer CPUs. A version of the AIfES library is also available for innovators basing their designs around an Arduino platform.

AIfES is free to use for private projects and requires a license agreement for commercial applications.

Figure 3 showcases the functionality and compatibility of the AIfES library across various platform types. The Fraunhofer AIfES library is compatible with popular Python ML frameworks such as TensorFlow, Keras, and PyTorch, and follows a similar workflow.

Figure 3: The Fraunhofer AI for Embedded Systems (AIfES) library function. (Source: Fraunhofer Institute for Microelectronic Circuits and Systems)

Next Steps to TinyML Implementation

In this article, we've highlighted three ML libraries and platforms suitable for the rapid development and deployment of edge-based TinyML applications. Developing an embedded ML IIoT application for edge deployment can seem a daunting task. However, these resources and workflows hide much of the complexity of neural networks and reduce the need for an in-depth understanding of data science principles.

The next steps in this process involve learning about different microcontroller platforms and evaluation kits supported by the development resources mentioned here. With relatively few extra components, a simple low-power microcontroller evaluation board, a sensor, and the resources highlighted, you can have a working TinyML demonstration in less than an hour.