Wireless Removes the Last Wires from Home Audio

The last bastion of wired connections in home audio has fallen, as wireless technology replaces wires in high-definition home audio systems. However, the question of sound quality remains a matter of debate.

Wireless communication pervades nearly every aspect of home entertainment. The last bastion of “the wire” may well be the loudspeakers in home theater and audio systems, as owners succumb to the allure of not having to run cables between floors, through walls, and under carpets. However, unlike connecting a computer, tablet, or phone to Wi-Fi, where “quality” is defined by higher data rates and the ability to reliably stream an HD movie, audio adds another metric: sound quality. Because judging this metric is highly subjective, a system can sound great to one person and horrid to another, presumably more “discerning,” listener. This raises the question of which transmission medium, from a technical perspective, delivers the best sound. Not surprisingly, it’s a matter of some debate.

Setting the Stage

For audiophiles, that small percentage of the population who take umbrage at anything that corrupts audio fidelity (which over the years has even included the effect of different types of speaker cables), the Holy Grail is transparency: zero degradation or variation of the sound relative to the original recording, or, going further still, to the analog sounds before they were mixed, recorded, and digitized. This ideal has not yet been achieved, but it’s certainly not for lack of trying by scientists, engineers, and the entire entertainment industry.

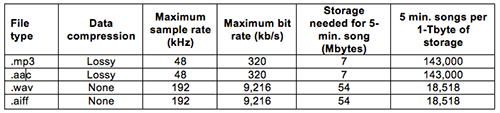

To explore this, it’s worth looking at data compression, probably the most controversial aspect of digital audio reproduction. Lossy compression compromises sound quality, but some form of compression is a practical necessity because an uncompressed file containing multi-channel sound is immense. The .wav file used by Windows and the .aiff file used by Apple on the Macintosh (but not on iTunes, iPhones, or iPods) are examples of uncompressed audio coding formats. A .wav file, for example, consumes 7–10 Mbytes of data for every minute of audio, so a 5-minute song requires at least 35 Mbytes of storage and an album at least 500 Mbytes. This makes uncompressed formats impractical for storage-limited consumer devices such as portable players. Lossy and lossless formats and the storage each requires per 5 minutes of audio are shown in Table 1.
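The storage figures above follow from simple arithmetic: uncompressed PCM size is sample rate × bytes per sample × channels × duration. The Python sketch below assumes CD-quality stereo (44.1kHz, 16-bit, two channels) and, for comparison, a hypothetical 5.1 mix at 48kHz/24-bit. It ignores file-header overhead, and the parameter values are illustrative assumptions rather than properties of any particular file.

```python
def pcm_bytes(seconds, sample_rate_hz=44_100, bits_per_sample=16, channels=2):
    """Size in bytes of raw, uncompressed PCM audio (file headers ignored)."""
    return seconds * sample_rate_hz * (bits_per_sample // 8) * channels

MB = 1_000_000  # decimal megabytes

# CD-quality stereo: 44.1 kHz, 16-bit, 2 channels (assumed parameters)
print(f"{pcm_bytes(60) / MB:.1f} MB per minute")           # 10.6
print(f"{pcm_bytes(5 * 60) / MB:.1f} MB per 5-min song")   # 52.9

# Hypothetical 5.1 mix: 48 kHz, 24-bit, 6 channels
print(f"{pcm_bytes(60, 48_000, 24, 6) / MB:.1f} MB per minute")  # 51.8
```

CD-quality stereo works out to a little over 10 Mbytes per minute, the top of the range quoted above; lower sample rates or mono recordings give smaller figures, while multi-channel, high-resolution audio grows to roughly five times that.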

Table 1: Lossy and Lossless Data Formats Compared

It’s safe to say that if no method had been discovered for compressing digital files while maintaining acceptable audio quality, the digital audio (and video) world as we know it would not exist. Fortunately, in 1894 an American physicist named Alfred Mayer (1836–1897) discovered an interesting phenomenon pertaining to how humans perceive sound. This phenomenon, called auditory masking, is too complex to discuss at length here, but in essence a loud sound can make quieter sounds at nearby frequencies inaudible. As it relates to compressing recorded audio, masking allows some sonic elements in a sound track to be eliminated without dramatically affecting its quality as perceived by listeners, which reduces file size enormously.
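To make the idea concrete, here is a deliberately simplified Python sketch. It is not a real psychoacoustic model and not how MP3 or any other codec actually computes masking thresholds. It treats a list of per-band levels in dB as a crude spectrum and discards any band that a louder band within a couple of positions exceeds by more than a fixed margin; the 20dB margin, the two-band reach, and the sample levels are arbitrary assumptions chosen for illustration.

```python
def drop_masked(levels_db, margin_db=20.0, reach=2):
    """Toy simultaneous-masking filter, NOT a real psychoacoustic model.

    levels_db: per-band levels in dB, ordered from low to high frequency.
    A band is dropped (replaced by None) when some band within `reach`
    positions of it is louder by more than `margin_db`.
    """
    kept = []
    for i, level in enumerate(levels_db):
        lo, hi = max(0, i - reach), min(len(levels_db), i + reach + 1)
        loudest_neighbor = max(
            (levels_db[j] for j in range(lo, hi) if j != i), default=float("-inf")
        )
        kept.append(None if loudest_neighbor - level > margin_db else level)
    return kept

bands = [30.0, 80.0, 45.0, 70.0, 20.0, 10.0, 25.0]  # made-up levels
print(drop_masked(bands))  # [None, 80.0, None, 70.0, None, None, 25.0]
```

Real encoders use frequency-dependent masking curves that spread further toward higher frequencies than lower ones, and they generally allocate bits so that quantization noise stays under the masking threshold rather than simply deleting bands. The sketch only shows why removing masked content can shrink the data without an obvious audible change.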

More than 80 years after Mayer’s discovery, scientists and engineers throughout the world spent many years creating increasingly sophisticated coding schemes that struck a better compromise between audio quality and file size. After fierce competition between many developers and a run-off between their techniques, the Moving Picture Experts Group (MPEG), the final arbiter of the formats used by the music and film industries, chose a method championed and heavily promoted by Philips.

The runner-up, which was similar (and later proven to be better), was developed by a group of scientists and engineers at Germany’s Fraunhofer Institute led by Karlheinz Brandenburg. These academics were not industry-supported lobbyists, and they were ultimately outgunned. The chosen format was named MPEG Audio Layer 2 (MP2 for short), while Brandenburg’s technique ultimately became MP3. To find out how the losing format eventually “won,” I heartily recommend Stephen Witt’s recent book “How Music Got Free: The End of an Industry, the Turn of the Century, and the Patient Zero of Piracy.” For a technical discussion of how MP3 works, a paper presented by Brandenburg at the AES 17th International Conference on High Quality Audio Coding is a great start.

High-performance “lossy” data compression schemes such as MP2 and MP3 were an enormous breakthrough, as they allowed hundreds or thousands of songs and other content to be stored in compact consumer products like “MP3 players” (later almost completely displaced by Apple’s iPod), as well as in any product, from mobile phones to USB thumb drives, that employs solid-state memory. They also allowed files to be shared over the Web even at relatively slow data rates, because file sizes were small enough to keep transfer times short. In addition, the MP3 file made possible peer-to-peer file sharing services such as Napster, Kazaa, and Bearshare, and with them widespread music piracy that did enormous harm to the music industry.
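A quick calculation shows why those smaller files mattered. The Python sketch below assumes a 128kbit/s MP3 (a common bit rate, used here only as an illustrative assumption) and a 56kbit/s dial-up link, and it ignores protocol overhead and metadata tags, so the numbers are rough.

```python
# Rough size/transfer arithmetic; bit rates and link speed are illustrative assumptions.
CD_KBPS = 44_100 * 16 * 2 / 1000   # uncompressed CD-quality stereo: 1411.2 kbit/s
MP3_KBPS = 128                     # assumed MP3 bit rate
MODEM_KBPS = 56                    # assumed dial-up link speed

def size_mb(kbps, seconds):
    """Size in decimal megabytes of a constant-bit-rate stream."""
    return kbps * 1000 * seconds / 8 / 1_000_000

def transfer_minutes(megabytes, link_kbps):
    """Minutes to move a file over a link, ignoring protocol overhead."""
    return megabytes * 8_000_000 / (link_kbps * 1000) / 60

song_s = 5 * 60
for name, kbps in [("uncompressed", CD_KBPS), ("MP3 @ 128 kbit/s", MP3_KBPS)]:
    mb = size_mb(kbps, song_s)
    print(f"{name}: {mb:.1f} MB, about {transfer_minutes(mb, MODEM_KBPS):.0f} min at 56 kbit/s")
print(f"compression ratio about {CD_KBPS / MP3_KBPS:.1f}:1")
```

At these assumed rates the MP3 is about one-eleventh the size of the uncompressed track, which turns a roughly two-hour download into one of about 11 minutes.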

For most people, MP3 audio is “good enough,” but because it is not a 100% reproduction of the original, audiophiles continue to denigrate it. The same criticism applies to the MPEG-4 format used by Apple’s consumer audio products and iTunes, as well as by Sony and other companies, even though some consider it to produce better sound quality than MP3. Audiophiles claim that what’s “left out” is important for maintaining fidelity, and their benchmark for comparison is, ironically, the vinyl record, an analog medium that involves no data compression but has audible limitations of its own.

You Be the Judge

Wikipedia offers a handy way to judge for yourself: three versions of the same acoustic guitar solo, each encoded in a different format. The first is an uncompressed .wav file; the second uses a lossy codec called Ogg Vorbis, generally considered comparable in sound quality to Advanced Audio Coding (AAC); and the third is an MP3. AAC, developed by AT&T Bell Laboratories, Fraunhofer, Dolby Laboratories, Sony Corporation, Nokia, and others, is considered the successor to MP3 and is used by Apple, Sony, Nintendo, and others.

Not surprisingly, audiophiles are also wary of wireless transmission, since it too has the potential to degrade fidelity, depending on whether the compression-decompression scheme (codec) it employs is lossless or nearly so. As wireless home audio systems increasingly rely on Bluetooth®, Wi-Fi, and various manufacturer-proprietary formats, the subject has given rise to a wide variety of lively discussions.
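The distinction turns on whether the decoder gets back exactly the bits that went in. The Python sketch below illustrates both outcomes on a synthetic test tone. zlib, a general-purpose compressor standing in here for a real lossless audio codec, reconstructs the samples bit for bit, while crudely discarding low-order bits (a stand-in for lossy coding, not a perceptual codec) leaves a nonzero error. The tone, sample rate, and bit-dropping scheme are all assumptions chosen for illustration.

```python
import math
import struct
import zlib

# Synthetic test signal (an assumption): 1 s of a 440 Hz tone, 16-bit mono at 8 kHz.
RATE = 8_000
samples = [round(10_000 * math.sin(2 * math.pi * 440 * n / RATE)) for n in range(RATE)]
pcm = struct.pack(f"<{len(samples)}h", *samples)  # little-endian signed 16-bit PCM

# Lossless: compress, decompress, and check for a bit-exact match.
packed = zlib.compress(pcm, 9)
restored = zlib.decompress(packed)
print("lossless round trip exact:", restored == pcm, f"({len(pcm)} -> {len(packed)} bytes)")

# Crude "lossy" step: zero the 8 least significant bits of every sample.
coarse = [(s >> 8) << 8 for s in samples]
max_error = max(abs(a - b) for a, b in zip(samples, coarse))
print("largest sample error after dropping bits:", max_error)
```

General-purpose compressors such as zlib typically shrink audio far less than purpose-built lossless codecs, which model the waveform before encoding; the point here is only the exact round trip.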

Bluetooth and Wi-Fi: Ubiquitous

Most wireless audio systems use Bluetooth or Wi-Fi, primarily because they are highly developed standards for which an immense amount of hardware is available, and because there is no single standard for wireless audio. Bluetooth and Wi-Fi have been pressed into service for wireless audio, but they were created for entirely different purposes, so neither has a set of requirements dedicated specifically to audiophile-quality wireless delivery of sound.

That said, Bluetooth is built around a series of profiles, each dedicated to a specific purpose, one of which is the Advanced Audio Distribution Profile (A2DP) for audio streaming. A2DP supports MP3 and MPEG-4 audio, including the Advanced Audio Coding (AAC) and High-Efficiency Advanced Audio Coding (HE-AAC) used by Apple, Sony, and other companies, as well as Sony’s Adaptive Transform Acoustic Coding (ATRAC). It also supports proprietary codecs such as apt-X from Cambridge Silicon Radio (now CSR), one version of which uses a “near-lossless” technique. Because Bluetooth audio is not lossless, it is by definition not an “audiophile-approved” solution. Even so, Bluetooth audio can sound very good. Texas Instruments’ PCM186x audio ADC with universal front end is one example of a device built to be compatible with Bluetooth, and it even off-loads some digital signal processing functions. Digital signal processing is a fact of life in mobile electronics.
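When a phone connects to a speaker over A2DP, the two sides settle on one codec that both support. The Python sketch below is a simplified, hypothetical model of that choice: the real exchange uses AVDTP capability discovery and configuration, the preference order shown is an assumption, and the fallback reflects the fact that A2DP requires every device to support its baseline SBC codec.

```python
# SBC is the baseline codec every A2DP source and sink must support.
MANDATORY_CODEC = "SBC"

def choose_codec(source_preferences, sink_supported):
    """Return the first codec in the source's preference list that the sink supports.

    Hypothetical selection policy for illustration only: real Bluetooth stacks
    learn the sink's capabilities via AVDTP and apply vendor-specific ordering.
    Falls back to SBC if nothing else matches.
    """
    for codec in source_preferences:
        if codec in sink_supported:
            return codec
    return MANDATORY_CODEC

phone = ["aptX", "AAC", "SBC"]                  # assumed preference order
print(choose_codec(phone, {"SBC", "AAC"}))      # AAC
print(choose_codec(phone, {"SBC", "aptX"}))     # aptX
print(choose_codec(["aptX", "AAC"], {"SBC"}))   # SBC (fallback)
```

Because the outcome depends on what both ends support, the same phone can deliver different sound quality to different speakers.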

In contrast, Wi-Fi can reliably carry any stream whose data rate fits within the capacity of a Wi-Fi channel (channels are up to 40MHz wide for IEEE 802.11n and 80MHz, or optionally 160MHz, for IEEE 802.11ac), so it can accommodate all audio and video formats, including HD video and even 4K Ultra HD and High Dynamic Range (HDR) video streaming. Wi-Fi also has far greater range than Bluetooth and can connect not just a home theater system but a whole-house audio/video system.

Bluetooth’s limited range is adequate for connecting an amplifier to its speakers but isn’t suited to serving more than a single room, which is why it is primarily used for streaming audio from a device such as a smartphone to a powered speaker. Unlike Bluetooth, Wi-Fi supports lossless codecs, which significantly adds to its appeal for audio. And because many people already have Wi-Fi, a wireless audio system is easy to integrate into the existing mix of connected products.

Figure 1: Texas Instruments' PCM186x audio front-end devices are meant for home theater, Bluetooth speakers, automotive head units, and microphone array processors. The PCM186x is 3.3V-ready, does not require an external PGA, and eases compliance with European Ecodesign legislation. It has a 7.8mm × 4.4mm footprint.

If We Only Had a Standard…

A standard designed exclusively to serve high-definition wireless audio is what the Wireless Speaker and Audio (WiSA) Association hopes to achieve. WiSA began to take shape in 2011, when a group of audio equipment manufacturers decided to collaborate on a wireless-audio-centric standard. Manufacturers of speakers and other audio or consumer electronics equipment, as well as retailers and installers, can join WiSA, which gives them access to the group’s compliance specification and reference designs. As with Wi-Fi and other standards, any product bearing the WiSA seal must comply with all elements of the WiSA 1.0 Certification Test Specification.

A WiSA-certified component uses the Dynamic Frequency Selection (DFS) channels between 5.2 and 5.8GHz that were previously reserved for weather and military applications. (Older wireless devices operate in the same crowded bands used by many other home products, such as baby monitors, microwave ovens, and cordless phones.) As part of the Obama Administration’s effort to open more of the spectrum allocated to the government to commercial use, the 5.2–5.8GHz band is now available on a shared basis. Sharing means that products operating at these frequencies must not interfere with existing services, which can be achieved by monitoring available channels and moving to another channel if a primary service is detected on the one in use. If this sounds familiar, it’s because similar restrictions were applied to the “white space” frequencies freed up when over-the-air television broadcasting transitioned from analog to digital transmission in 2009.

WiSA takes this a step further by moving to another channel whenever any type of interference is detected, and it does so without interrupting what’s playing, a necessary step in creating a pristine environment for high-definition audio. WiSA-certified systems use the same transmission scheme as IEEE 802.11a Wi-Fi, except that the WiSA speakers receiving the signal from the audio system are not resident on the network, which also helps to avoid interference.
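The channel-avoidance behavior described above can be modeled as a small state machine. In the hypothetical Python sketch below, a plain DFS device leaves a channel only when a primary service such as radar is detected, while the WiSA-style policy also leaves on ordinary interference. Channel numbers 52–64 (DFS channels in the lower 5GHz band) are illustrative; detection thresholds, timing rules, and the seamless hand-off that keeps audio playing are not modeled, and nothing here reflects the actual WiSA specification.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set

@dataclass
class ChannelManager:
    """Hypothetical model of the channel-avoidance policies described in the text."""
    channels: List[int]                  # candidate channels, in order of preference
    move_on_interference: bool = True    # True: WiSA-style policy; False: plain DFS
    current: Optional[int] = None
    avoided: Set[int] = field(default_factory=set)

    def __post_init__(self) -> None:
        self.current = self.channels[0]

    def report(self, channel: int, event: str) -> int:
        """Record "radar" or "interference" on a channel; return the channel in use."""
        # Radar (a primary service) always forces a move; other interference
        # forces one only under the WiSA-style policy.
        must_leave = event == "radar" or (
            event == "interference" and self.move_on_interference
        )
        if must_leave:
            self.avoided.add(channel)
            if channel == self.current:
                self.current = self._first_clear_channel()
        return self.current

    def _first_clear_channel(self) -> int:
        for ch in self.channels:
            if ch not in self.avoided:
                return ch
        raise RuntimeError("no clear channel left")

wisa = ChannelManager([52, 56, 60, 64])
dfs_only = ChannelManager([52, 56, 60, 64], move_on_interference=False)
print(wisa.report(52, "interference"))      # 56: WiSA-style policy moves
print(dfs_only.report(52, "interference"))  # 52: plain DFS stays put
print(dfs_only.report(52, "radar"))         # 56: radar forces a move
```

For simplicity the sketch never returns to an avoided channel; real DFS rules instead keep a channel off-limits for a set non-occupancy period after radar is detected.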

A WiSA transmitter can broadcast up to eight channels of audio (7.1 surround sound) to as many as 32 speakers in a room or throughout a house. The speakers reproduce uncompressed (that is, lossless) 24-bit audio at the native sampling rate of the media being played, up to 96kHz. System latency is 5ms, which is lower than the delay introduced by most TV video processors, making WiSA well suited to video games as well. That is also far lower than Bluetooth latency, which is 40ms for aptX® Low Latency and 150ms for standard Bluetooth.
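The figures above translate into concrete numbers. The Python sketch below computes the raw payload rate for eight channels of 24-bit, 96kHz audio and expresses the quoted latencies in frames of 60Hz video (an assumed frame rate) to show why 5ms matters for lip sync and gaming. Packet, retransmission, and error-correction overhead are ignored.

```python
def pcm_mbps(channels, bits_per_sample, sample_rate_hz):
    """Raw PCM payload rate in Mbit/s (no packet, retransmission, or FEC overhead)."""
    return channels * bits_per_sample * sample_rate_hz / 1e6

# WiSA's stated maximum: 8 channels of 24-bit audio at 96 kHz
print(f"8 x 24-bit @ 96 kHz: {pcm_mbps(8, 24, 96_000):.3f} Mbit/s")  # 18.432

# Quoted latencies expressed in frames of 60 Hz video (assumed frame rate)
FRAME_MS = 1000 / 60  # about 16.7 ms per frame
for name, ms in [("WiSA", 5), ("aptX Low Latency", 40), ("standard Bluetooth", 150)]:
    print(f"{name}: {ms} ms = {ms / FRAME_MS:.1f} frames")  # 0.3, 2.4, 9.0
```

About 18.4Mbit/s of payload is roughly a third of the 54Mbit/s peak PHY rate of 802.11a, whose transmission scheme WiSA shares, though real-world throughput is below the peak rate. At 5ms, WiSA's delay is a fraction of a video frame, whereas 150ms is roughly nine frames.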

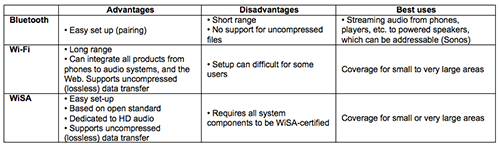

The WiSA Association boasts that a WiSA-certified link between two devices will not adversely affect sound quality and “will sound as good as copper wire, if not better.” Because the audio is transmitted digitally to amplifiers that the manufacturer customizes to match the speaker cones and their acoustic enclosures, the WiSA Association says certified wireless speakers can actually sound better than wired versions of the same speaker. The advantages and disadvantages of Bluetooth, Wi-Fi, and WiSA are shown in Table 2.

Table 2: Bluetooth, Wi-Fi, and WiSA Compared

Other Alternatives

In addition to systems that use Bluetooth or Wi-Fi or are certified to the WiSA specifications, there is Apple’s proprietary AirPlay protocol, which allows wireless streaming of audio, video, device screens, and photos between devices. Apple licenses the technology to other equipment manufacturers so that their products can work with Apple's. For audio streaming, AirPlay transcodes data streams using the Apple Lossless codec at a 44.1kHz sampling rate, in two channels protected by AES encryption.

Wireless speaker manufacturer Sonos uses a wireless peer-to-peer mesh network called SonosNet that allows connections to Sonos amplified speakers throughout a house in separate zones. The latest version, SonosNet 2.0, integrates Multiple Input Multiple Output (MIMO) technology with IEEE 802.11n hardware to enhance performance. There are many other alternatives offered by various manufacturers, most of which use Wi-Fi for transmission.

Summary

Almost every promising new capability in the electronics industry starts out as a fragmented group of competing, sometimes proprietary approaches, until ultimately one reigns supreme. There are many examples: Hewlett-Packard’s rather unsuccessful attempt to customize the General Purpose Interface Bus (GPIB), the battle between competing 4G wireless standards in the U.S. (won by LTE), the current contest between champions of various wireless standards for supremacy in connecting anything and everything (that is, the Internet of Things), and of course the legendary battle between JVC’s VHS and Sony’s Betamax, which VHS eventually won, only to be overtaken by optical media.

The situation in the home audio community is far less contentious, and most people arguably neither know nor care which format wins, as long as what comes out of the speakers is listenable. This could conceivably continue for a long time, until one open standard is adopted by most or all audio manufacturers. Until then, Wi-Fi will dominate most home audio situations, especially whole-house environments, while Bluetooth reigns supreme for streaming from portable devices to powered speakers.

There is certainly a case to be made for a single standard for high-definition wireless audio, at least for systems in which only the highest level of audio quality is acceptable. It would go a long way toward satisfying buyers of these systems, as well as audio consultants, installers, and manufacturers. The rest of us can simply accept whatever the manufacturer of our chosen sound system uses and be thankful we no longer have to contend with wires.