Human Machine Integration

Source: Hikari Pictures/stock.adobe.com

The evolution of human-machine interaction has progressed from simple, manually operated tools requiring physical skill to sophisticated electronic systems that blur the lines between humans and machines. Early tools such as winches and catapults extended human capabilities through interfaces manipulated directly by hand or foot. This era of simple human-machine interfaces (HMIs) enabled human progress for millennia; even complex machinery such as steam locomotives was controlled through physical levers and knobs. The advent of electricity marked a significant leap in HMI design, introducing buttons, switches, and visual indicators that allowed more nuanced control of, and communication with, machines.

Today, advancing technology is eroding the once-distinct boundaries between humans and machines. Integration and interaction with machines are becoming more seamless and interconnected, a shift that brings both opportunities and challenges.

Usefulness of an HMI

The usefulness of HMIs increased significantly with the advent of character and graphic displays and the evolution of touchscreen technology. Microcontrollers and processors let machines store user preferences and streamline operations, allowing quick and efficient mode selection. Motor controls and force feedback brought new levels of precision to machine operation. However, innovative technology is not necessarily effective technology. Early virtual reality (VR) systems, for instance, struggled with real-time head tracking; the lag between a user's head movement and the displayed image created a disconnect between expected and actual visual feedback that caused discomfort.

Effective HMIs are intuitive and require minimal training, which is a large part of what makes them useful. Brighter displays and touchscreens transformed user interaction, although early touchscreens had notable drawbacks, such as the need for periodic recalibration and support for only limited touch input. The shift to projected capacitive touch technology significantly advanced HMI functionality, as the widespread adoption of smartphones and tablets demonstrates. These devices are intuitive enough to learn and operate without extensive training, even by children, who often outpace adults in mastering new technologies.

A critical aspect of HMI design is ensuring operator comfort and minimizing fatigue over long periods of use. Modern interfaces, particularly on tablets and smartphones, are designed for prolonged engagement, though this raises concerns about potential addiction. Head-up displays in aviation likewise show the importance of sustaining operator focus and comfort during lengthy sessions. Designers must also address the risk of repetitive stress injuries; good ergonomics supports user health and productivity in settings ranging from assembly lines to offices.

Safety of an HMI

Modern VR systems are among the most advanced technologies to reach the mainstream, with several key players dominating the market and room for more as augmented reality (AR) systems gain popularity. Both VR and AR excel at creating immersive experiences: they enhance learning and training environments, are transforming entertainment, and offer new ways to interact with digital content.

Although these technologies are currently used most commonly for gaming, they will increasingly be used in industrial control, factory automation, and repair centers for complex machines. Advanced sensors and detectors let the HMI track the user's hands and support advanced gestures such as spread or dive (Figure 1). Even while immersed in a virtual environment, users can operate handheld switches and buttons on wireless controllers.

Figure 1: VR and AR systems can allow better visualization of complex systems, which is useful for training, repair, and service of machines. (Source: Gorodenkoff/stock.adobe.com)

Advances in technology, particularly accelerometers and precise head tracking, have reduced the disorienting lag in virtual environments, thereby improving safety. Challenges remain, however: a user wearing an immersive headset cannot see real-world obstacles, so the system must warn of the play-area boundary with visual and auditory alerts. Omnidirectional treadmills with sensors offer one way to move safely through virtual spaces by tracking the user's motion while keeping the user in place. AR is inherently safer because it overlays digital information onto the real world, so users can still see obstacles. This makes AR particularly useful in specialized fields such as aerospace, where it can simplify complex tasks like jet engine repair by highlighting components, and it promises to evolve toward 3D projections that enable more intuitive interaction.
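Boundary alerts of this kind reduce to a distance check on every tracking update. The following is a minimal sketch, not any headset vendor's API: it assumes a hypothetical rectangular play area measured in meters and illustrative warning distances, and escalates from no alert, to a visual warning, to a combined visual and audio warning as the tracked head position nears an edge.

```python
from dataclasses import dataclass
from enum import Enum


class Alert(Enum):
    NONE = 0
    VISUAL = 1            # e.g., fade in a boundary grid
    VISUAL_AND_AUDIO = 2  # grid plus a warning tone


@dataclass
class PlayArea:
    """Hypothetical rectangular play area on the floor, in meters."""
    min_x: float
    max_x: float
    min_z: float
    max_z: float

    def distance_to_edge(self, x: float, z: float) -> float:
        """Distance from (x, z) to the nearest edge; negative if outside."""
        return min(x - self.min_x, self.max_x - x, z - self.min_z, self.max_z - z)


def boundary_alert(area: PlayArea, x: float, z: float,
                   visual_m: float = 0.5, audio_m: float = 0.2) -> Alert:
    """Escalate the alert as the user nears the boundary (thresholds are illustrative)."""
    d = area.distance_to_edge(x, z)
    if d <= audio_m:
        return Alert.VISUAL_AND_AUDIO
    if d <= visual_m:
        return Alert.VISUAL
    return Alert.NONE


area = PlayArea(min_x=0.0, max_x=3.0, min_z=0.0, max_z=2.5)
print(boundary_alert(area, 1.5, 1.25))  # center of the area: Alert.NONE
print(boundary_alert(area, 2.6, 1.25))  # 0.4 m from an edge: Alert.VISUAL
print(boundary_alert(area, 2.9, 1.25))  # 0.1 m from an edge: Alert.VISUAL_AND_AUDIO
```

Using two thresholds rather than one gives the user a gentle visual cue first and reserves the more intrusive audio warning for when a collision is imminent.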

Gesture recognition in AR headsets enables precise control and interaction, improving efficiency in applications such as complex machinery repair guided by audiovisual cues. These systems not only improve task performance but can also detect flaws beyond human capability. However, in industrial control and remote military operations such as piloting drones, HMIs must balance sensitivity against robustness: they must remain reliable under conditions like nearby explosions or unintentional operator movement, with safety mechanisms that prevent unintended actions.
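One common safety mechanism for this trade-off is a hold-to-confirm gate: a command fires only after the recognizer reports the same gesture with high confidence for several consecutive frames, so a single jolt or twitch cannot trigger it. The sketch below assumes a hypothetical recognizer that emits a (gesture label, confidence) pair per frame; the class name, thresholds, and gesture labels are illustrative, not taken from any particular product.

```python
from typing import Optional


class GestureGate:
    """Issue a command only after a gesture is held with high confidence.

    Any low-confidence or missing frame (a jolt from a nearby blast, an
    accidental twitch) resets the counter, so brief spurious detections
    never fire a command.
    """

    def __init__(self, min_confidence: float = 0.9, hold_frames: int = 5):
        self.min_confidence = min_confidence
        self.hold_frames = hold_frames
        self._candidate: Optional[str] = None
        self._count = 0

    def update(self, gesture: Optional[str], confidence: float) -> Optional[str]:
        """Feed one recognizer frame; return the gesture once confirmed, else None."""
        if gesture is None or confidence < self.min_confidence:
            self._candidate, self._count = None, 0
            return None
        if gesture != self._candidate:
            # A different gesture restarts the hold period
            self._candidate, self._count = gesture, 0
        self._count += 1
        if self._count == self.hold_frames:
            return gesture  # fires exactly once per continuous hold
        return None


gate = GestureGate(min_confidence=0.9, hold_frames=3)
frames = [("grab", 0.95), ("grab", 0.97), (None, 0.0),  # interrupted hold
          ("grab", 0.95), ("grab", 0.96), ("grab", 0.94), ("grab", 0.99)]
print([gate.update(g, c) for g, c in frames])
# [None, None, None, None, None, 'grab', None]
```

Raising `hold_frames` or `min_confidence` makes the interface more robust at the cost of responsiveness, which is exactly the sensitivity-versus-robustness balance the paragraph above describes.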

Economics of an HMI

Implementing an advanced HMI requires certain key sub-assemblies. Thanks to the explosive growth of cell phones and tablets, high-resolution displays are readily available at low cost. The same is true of accelerometers and small, high-resolution video cameras. High-volume applications can leverage these components at lower costs than ever before.

VR headsets commonly use thin-film transistor liquid crystal displays (TFT LCDs), whereas AR devices favor organic light-emitting diode (OLED) displays because they can be transparent and flexible and require no backlight. Although OLED technology is currently more expensive and less mature, the TV and smartphone industries are rapidly adopting it, which should lower costs and improve availability.

In military and aerospace applications, HMI designs prioritize durability and longevity. Not every application requires such a long mean time between failures (MTBF), however, and a skilled design team is crucial for balancing durability against cost and repairability to prevent field failures. At the other extreme, some manufacturers practice planned obsolescence to encourage periodic upgrades to newer models, tilting the balance between product longevity and innovation cycles toward the latter.

Current State of HMIs

Tablets and phones have increasingly replaced desktops and laptops for personal browsing, streaming media, and basic office tasks such as email and document editing. Even in business settings, touchscreen-enabled laptops blend traditional computing with the portability and intuitive interface of mobile devices.

However, in some applications, such as CAD and CAE work, this will not happen. For example, large, high-resolution displays make designing a printed circuit board (PCB) easier, less stressful, and less error-prone. Don’t be surprised if a clever CAE vendor starts offering a VR-based PCB or chip layout tool that takes advantage of advanced imaging and interfaces such as speech recognition.

Speech recognition has come a long way, but almost anyone who uses speech-to-text applications knows it is not yet perfect. Advanced digital signal processing (DSP) is already being used to filter out background noise and discern subtleties, and reliability should reach an accepted level once AI hardware can make decisions based on context.

The Invisible Touch

Integral to modern and future HMIs is the ability to receive physical feedback from a virtual machine. The emerging field of haptics provides this, though not yet economically at large scale. Haptic feedback represents a leap in how we interact with virtual systems, offering tactile sensations that mimic real-life experiences. Whereas traditional interactions rely on the tactile response of physical buttons and switches, haptics, voice commands, and gesture recognition broaden the ways we can interact with machines without physical controls, enhancing the user experience of virtual HMIs.

For example, some haptic systems already on the market provide positive physical feedback when the user pushes a virtual button, using a glove with micro solenoids, air pressure, or fluid transfer (Figure 2). Multi-finger haptics can be useful for typing on a virtual keyboard. There are even ultrasonic emitter arrays that focus wavefronts on a specific point in the air, letting you feel a click where nothing is there.

Figure 2: Advanced haptic gloves precisely detect and respond to hand and finger movements and can produce tactile clicks, pressures, and even temperatures. (Source: Александр Лобач/stock.adobe.com)

Some applications, such as remote surgery, require more than simple clicks: they need precise force feedback that simulates, for example, the resistance a surgeon feels when cutting with a scalpel. This immersive experience demands low latency and accurate feedback so that surgeons apply the correct pressure. Beyond gloves, products such as high-end gaming chairs simulate g-forces for realistic driving or flying experiences.
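A widely used way to render this kind of resistance is a spring-damper, or "virtual wall," model: once the tool passes a virtual surface, the device pushes back with a force proportional to penetration depth plus a term proportional to velocity. The sketch below is illustrative only; the function name, stiffness, damping, and force limit are assumptions rather than measured tissue properties, and a real system would call such a function inside a high-rate control loop (haptic loops are often cited at around 1 kHz) to keep latency low.

```python
def tissue_resistance_force(tool_depth_m: float, tool_velocity_mps: float,
                            stiffness_n_per_m: float = 300.0,
                            damping_ns_per_m: float = 2.0,
                            max_force_n: float = 5.0) -> float:
    """Spring-damper ("virtual wall") force, in newtons, pushing back on the tool.

    tool_depth_m: how far the tool tip has pressed past the virtual surface
                  (<= 0 means no contact).
    tool_velocity_mps: tool speed into the surface (positive = pressing in).
    All parameter values are illustrative, not real tissue properties.
    """
    if tool_depth_m <= 0.0:
        return 0.0  # not touching the surface: no resistance
    # Spring term resists depth; damping term resists pressing in quickly
    force = stiffness_n_per_m * tool_depth_m + damping_ns_per_m * tool_velocity_mps
    # Never pull the tool inward, and clamp to what the actuator can safely deliver
    return max(0.0, min(force, max_force_n))


for depth_mm in (0, 5, 10, 20):
    f = tissue_resistance_force(depth_mm / 1000, 0.0)
    print(f"{depth_mm} mm -> {f:.2f} N")
# 0 mm -> 0.00 N, 5 mm -> 1.50 N, 10 mm -> 3.00 N, 20 mm -> 5.00 N (clamped)
```

The clamp matters as much as the model: it keeps a tracking glitch or sudden large penetration from commanding a force the actuator, or the operator's hand, should not experience.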

Neural Interfaces and Beyond

Research and development in neural interfaces has made significant strides over the past decade, offering new possibilities for mobility and function to people with disabilities caused by accidents or congenital conditions. Initially, surface sensors detected nerve impulses or muscle movements to control prosthetic limbs. Advances in DSP technology and computing power have since enabled more complex, multi-sensor control of artificial limbs that users can master with training.

A pivotal development in neural interface technology was the direct insertion of electrodes into the body, including the brain, to treat disorders such as Parkinson's disease and to pace the heart. A notable early achievement was a bio-silicon interface, created in the 1970s, that connected slug neurons to integrated circuits (ICs); slugs' unusually large neurons made them well suited to this pioneering research.[1]

This groundwork led to sophisticated multi-sensor/stimulator interfaces capable of connecting ICs directly to the human brain. A landmark occurred in 2004, when a color-blind artist received an implant that let him perceive colors beyond the normal visual spectrum, from infrared to ultraviolet, as vibrations he experiences as sound.[2] The implant not only compensated for his achromatopsia (complete color blindness) but also extended his senses, a significant step toward augmenting human abilities through technology.

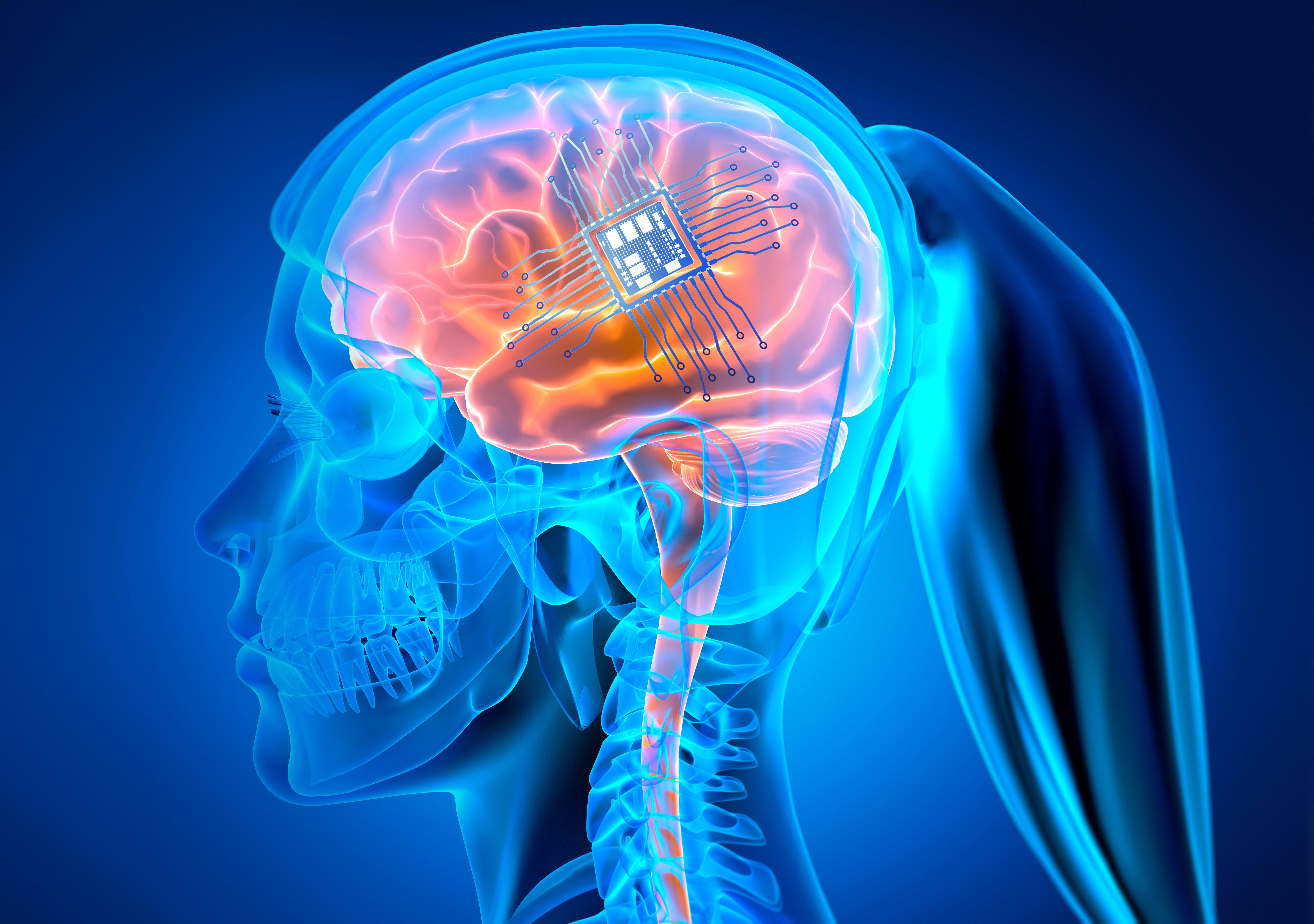

More recently, Neuralink Corp. developed a chip that interfaces with the brain areas responsible for motor control, allowing users to operate devices such as cell phones and computers by thought.[3] The technology works by detecting neuron firing patterns. Potential future applications include restoring motor function, managing pain, and enhancing the senses, for example by enabling vision in the infrared spectrum or hearing at ultrasonic frequencies (Figure 3). Research into decoding neural activity is ongoing, with possible applications ranging from mood and health monitoring to preemptive identification of violent behavior.

Figure 3: Artist concept of a chip implanted into the brain. (Source: peterschreiber.media/stock.adobe.com)
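Detecting neuron firing patterns usually begins with finding individual spikes in each electrode's voltage trace. The following is a minimal sketch of one generic, widely taught technique (negative threshold crossing with a median-based noise estimate), not Neuralink's actual signal chain. The function name, the threshold multiplier `k`, the refractory window, and the assumption that the trace is already band-pass filtered are all illustrative.

```python
import statistics


def detect_spikes(samples, k=4.5, refractory=30):
    """Return sample indices where a neuron likely fired (negative threshold crossings).

    samples: band-pass-filtered voltage trace from one electrode (assumed).
    k: threshold in multiples of the estimated noise level (illustrative).
    refractory: samples to skip after a detection so one spike isn't counted twice.
    """
    # Robust noise estimate: median(|x|) / 0.6745 approximates the standard
    # deviation of Gaussian noise without being inflated by the spikes themselves.
    sigma = statistics.median(abs(s) for s in samples) / 0.6745
    threshold = -k * sigma
    spikes = []
    i = 0
    while i < len(samples):
        if samples[i] < threshold:
            spikes.append(i)
            i += refractory  # ignore the rest of this spike's waveform
        else:
            i += 1
    return spikes


trace = [0.1, -0.1] * 100  # low-level background noise
trace[50] = -2.0           # two simulated spikes
trace[120] = -2.0
print(detect_spikes(trace))  # [50, 120]
```

The resulting spike times, collected across many electrodes, form the firing patterns that a decoder would then map to intended movements; that decoding stage is beyond this sketch.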

Other Uses for Brain-Implanted Interfaces

Neural interfaces promise transformative applications in entertainment, medical detection and intervention, and mood regulation. These technologies could extend well beyond traditional uses in machine control and medical prosthetics, potentially changing how we interact with digital environments and manage our health. Advanced neural interfaces could enable fully immersive entertainment, simulating sensations entirely within the user's mind and ushering in a new era of virtual and augmented reality.

In healthcare, neural interfaces might soon detect and preempt medical events such as seizures, heart attacks, and strokes, using embedded sensors paired with devices that administer medication and automatically alert healthcare providers. These interfaces could also offer new approaches to managing mood swings and depression by monitoring brain chemistry in real time.

The broad potential of neural interfaces also underscores the need for careful attention to privacy, consent, and autonomy to ensure that they are developed and applied ethically.

Conclusion

HMIs have progressed from basic tools to advanced systems that blur the lines between technology and biology. Early mechanical controls evolved into sophisticated electronic interfaces offering precise control and feedback. Neural interfaces enhance mobility for people with disabilities and pave the way for direct brain-computer communication. State-of-the-art HMIs now provide immersive experiences and improved control, and future innovations, from sensory enhancement to medical intervention, will integrate technology further into human experience. As HMIs advance, they promise to expand human capabilities and improve quality of life, pointing toward a future in which technology and human life are seamlessly connected.

[1] Barbara Symonds Beltz, “The Salivary System of Limax Maximus: Morphology and Peripheral Modulation of Buccal Neurons by Salivary Duct Afferents,” PhD diss., Princeton University, 1979, ProQuest (7928466).

[2] Michelle Z. Donahue, “How a Color-Blind Artist Became the World’s First Cyborg,” National Geographic, April 3, 2017, https://www.nationalgeographic.com/science/article/worlds-first-cyborg-human-evolution-science.

[3] Denise Chow, “Behind Elon Musk’s Brain Chip: Decades of Research and Lofty Ambitions to Meld Minds with Computers,” NBCNews.com, February 4, 2024, https://www.nbcnews.com/science/science-news/neuralink-elon-musk-science-behind-rcna136352.