Context-Driven Models for Smarter Forecasts

(Source: decorator/stock.adobe.com; generated with AI)

Most forecasters are statistical or deep models trained on the target series alone. That approach works when future data points are expected to look like past ones. However, they falter when the signal depends on outside forces or if historical data contains anomalies that require explanation to understand their impact, such as retail promotions, weather, outages, or policy changes. Retail sales jump during sales promotions, energy demand moves with temperature and holidays, and operational metrics respond to planned interventions. A model that learns from numbers alone will miss these real-world driving forces. Large-language models (LLMs) trained for advanced reasoning can change this workflow. By combining the numerical data with a concise domain brief, these models can integrate real-world context, produce forecasts, and explain the “why” behind them. This blog covers when pure time series methods struggle, how context-aware LLM forecasters work, what to include as additional context, as well as practical strengths and limitations.

When Data Isn’t Everything

Time series forecasting models perform well with sufficient data and when the past contains most of what the future will look like. However, because these models learn patterns from the numbers alone by implicitly capturing elements like seasonality and trend, they struggle when important drivers live outside the series. This can happen for various reasons, such as a short history of available data, future events expected to affect predictions, or even past activities that caused anomalies in the data that may not count as outliers. In these cases, while the model tries to infer the “rules” of predicting this time series from the data, it will be unable to. With insufficient data, such models cannot determine if particular data points should be given more weight. For instance, if mid-year and end-of-year data are crucial but only one year of monthly data is provided, it will be impossible to derive that knowledge. Equally, if a new marketing campaign or promotional event is known to drive sales by a certain percentage, or if a failure occurs that will cause predictions to drop, models that only learn from time series data will be unable to account for these factors. The other major pitfall of classical forecasting models is their blackbox nature, which means that it’s not clear how these models make decisions from the data given, and the reasoning behind certain predictions is not easily known. When users seek to understand why a model made a specific forecast but are unable to gain this knowledge, their trust in the results is often called into question.

Context Is Key in LLM Forecasting

To take into account these various kinds of domain knowledge when forecasting, developers can repurpose LLMs to provide predictions based on prior data as well as contextual information. The method involves selecting an appropriate LLM, establishing a baseline prompt template, and injecting relevant historical data with the specific context required to forecast well for that target. Following what a subject matter expert (SME) might do, the process of forecasting involves several logical steps, like reviewing the data, understanding how it might move as a result of the given domain knowledge, and then applying both to form a prediction for a future time point. As such, the models that can replicate this type of behavior the best tend to be reasoning models, such as Gemini Pro 2.5 and GPT o4-mini or GPT-o3, which typically perform some kind of chain of thought to establish and then work through the steps of a process. Alternatively, more advanced LLMs, like Llama-3.1-405B-Inst, can also perform well with different contexts.

Contextual information to be paired with numerical data can include elements like “intemporal” information, such as constraints on values or seasonalities that have more extended periods than the duration of available data. Historical facts that cannot be inferred from the series should also be included. For example, sensor maintenance that caused a dip, or a work stoppage that suppressed volume, should be tagged as spurious so the model does not extrapolate the anomaly unless a similar event is expected to recur. Additionally, causal information should be provided, such as known interventions with timing and expected magnitude or prior effect sizes. “Campaign A starts in October and typically lifts orders 8–12 percent for 2 weeks” is an example of an actionable statement that can guide the forecast beyond pattern matching.

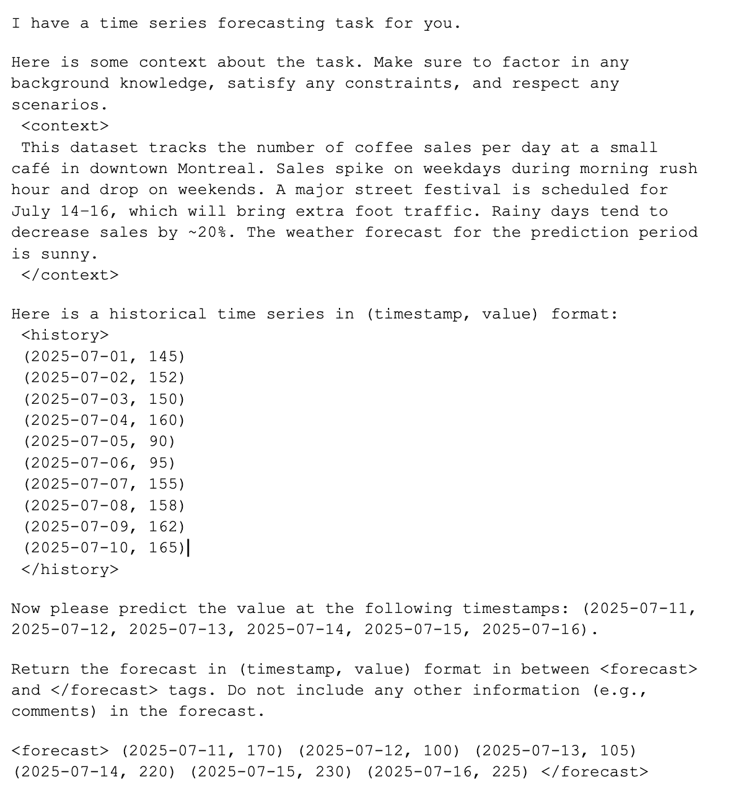

Here is a simple contextual prompt structure:

- Describes the series and target horizon

- Lists historical data as dates and values, providing any additional numerical data as needed (

Figure 1 ) - Adds all relevant context required to understand the rules of forecasting, including information that affects the forecast but is not visible in the data

- Describes how the output should be formatted and if the results should be consumed by some upstream process (

Figure 2 )

Figure 1: Example of a templated prompt for context-is-key LLM forecasting. (Source: Author)

Figure 2: Example of the filled-in template with relevant context and historical data. (Source: Author)

With this contextual model structure, the model’s job is to reconcile the numeric history with the brief, then surface a prediction and the reasoning behind it.

The Reality of Context-Aware LLM Forecasting

Context-aware LLM forecasters excel when rules are describable and the domain has known external drivers, such as retail with promotional calendars, energy with weather forecasts and holidays, or operations with scheduled outages. They are especially attractive when you need interpretability, since reasoning-oriented models can return a natural-language rationale. However, on the downside, these can fail more significantly than other models, for example, by producing values that under or overshoot the ground truth by up to 500 percent,[1] as researchers found. Additionally, cost and latency matter. Bigger models often reason better, but they also consume more tokens, so picking the smallest model that meets accuracy and interpretability needs is important. Moreover, combining traditional forecasting methods with LLM-based ones can also limit the impact of errors, a common technique known as ensembling.

Conclusion

Pure time series models are efficient pattern learners, but they underperform when known, external factors drive the future. Context-aware LLM-aided forecasting closes that gap by encoding rules, constraints, and dated interventions to align with the numeric history to produce both a prediction and a defensible rationale. The approach is strongest when clear causal notes with concise and relevant background information can be provided, and when interpretability is part of the requirement. While the accuracy of these models can dramatically exceed that of models trained on numerical data alone, they can fail more significantly, so it is recommended to pair a capable numeric model with a well-briefed reasoning model to get forecasts that are both smarter and easier to trust.