Dexterous Robotics: The Fast-Track to Mastery

Breakthrough Robotics Approach Unlocks Rapid Mastery of Dexterous Behaviors

(Source: TRI Press Room)

Toyota Research Institute (TRI) has unveiled a new approach that moves robotics beyond routine pick-and-place tasks and toward robots that can quickly learn and refine new, dexterous behaviors. Robots have traditionally been limited to simple, repetitive motions. They are inching toward greater intelligence, but a key ingredient has been missing until now.

With TRI's method, robots learn by observing demonstrations and can master complex manipulation tasks overnight: using tools, pouring liquids, and even peeling vegetables, all without any change to their code and without exhaustive programming (Figure 1). Instead of laborious machine learning on millions of training examples, a small set of demonstrations is enough to make robots not just smart, but skilled and adaptable.

Figure 1: “Teaching” the robot cooking skills that it learns in one afternoon and can perform by the next morning. (Source: TRI)

The New Approach

Russ Tedrake, VP of Robotics Research at TRI, launched an effort in 2016 to give robots unprecedented dexterity. Early hurdles led the team to a pivotal question: "What's the Achilles' heel of our new-age robotics?" Their answer was an over-reliance on simulation. That insight gave rise to the Intuitive Physics Project, an initiative to develop human-like manipulation abilities beyond the confines of virtual worlds.

Instead of leaning on simulation, the team turned to imitation learning with a modern twist. In the summer of 2022, an intern proposed a key change: using diffusion models. These models allow reactive policies to be learned efficiently from a small set of demonstration data.

Once a set of demonstrations has been collected for a particular behavior, the robot learns to perform that behavior autonomously. At the heart of this capability is a generative AI technique called diffusion. A teacher first demonstrates a small set of skills; Diffusion Policy [1] then learns from those demonstrations and generates robot actions from sensor observations and natural-language input. After several hours of training following the teacher's demonstrations, the robot can perform the behavior on its own. The use of diffusion provides three key benefits:

- It handles multimodal demonstrations (the same task performed in several valid ways), so human demonstrators can teach behaviors naturally without worrying about confusing the robot

- It is suitable for high-dimensional action spaces, so the robot can plan forward in time and avoid myopic, inconsistent, or erratic behavior

- It enables stable, reliable training of robots at scale with confidence they will work without hand-tuning or hunting for golden checkpoints
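To make the first two benefits concrete, here is a toy Python sketch; it is not TRI's implementation. It assumes a single fixed observation and ten hypothetical demonstrations of an 8-step action chunk that steers around an obstacle, half detouring left and half detouring right. Diffusion Policy trains a neural network conditioned on observations to predict the noise; this sketch instead uses the closed-form optimal denoiser for the tiny demo set (the posterior mean of the clean chunk given the noisy one) inside a standard DDPM reverse-sampling loop. The noise schedule, horizon, and all names (`sample_action_chunk`, `denoise`, `LEFT`, `RIGHT`, and so on) are illustrative assumptions.

```python
import math
import random
from typing import List

H = 8    # action horizon: number of future actions denoised together (illustrative)
T = 50   # number of diffusion steps (illustrative)

# Ten hypothetical demos for one observation: detour left or right of an obstacle.
_ts = [i / (H - 1) for i in range(H)]
LEFT = [-math.sin(math.pi * t) for t in _ts]
RIGHT = [math.sin(math.pi * t) for t in _ts]
DEMOS = [LEFT, RIGHT] * 5

# Linear DDPM noise schedule and its cumulative products.
BETAS = [1e-4 + (0.2 - 1e-4) * k / (T - 1) for k in range(T)]
ALPHAS = [1.0 - b for b in BETAS]
ALPHA_BARS: List[float] = []
_prod = 1.0
for _a in ALPHAS:
    _prod *= _a
    ALPHA_BARS.append(_prod)


def denoise(x: List[float], k: int) -> List[float]:
    """Optimal denoiser for the demo set: E[clean chunk | noisy chunk x at step k]."""
    ab = ALPHA_BARS[k]
    s = math.sqrt(ab)
    # Log-likelihood of x under x = sqrt(ab) * demo + sqrt(1 - ab) * noise.
    logw = [-sum((xi - s * di) ** 2 for xi, di in zip(x, d)) / (2.0 * (1.0 - ab))
            for d in DEMOS]
    top = max(logw)
    w = [math.exp(lw - top) for lw in logw]  # softmax, shifted for numerical stability
    z = sum(w)
    return [sum(wj * d[i] for wj, d in zip(w, DEMOS)) / z for i in range(H)]


def sample_action_chunk(rng: random.Random) -> List[float]:
    """DDPM ancestral sampling of a whole H-step action chunk, starting from pure noise."""
    x = [rng.gauss(0.0, 1.0) for _ in range(H)]
    for k in reversed(range(T)):
        x0 = denoise(x, k)
        ab = ALPHA_BARS[k]
        ab_prev = ALPHA_BARS[k - 1] if k > 0 else 1.0
        # Mean of q(x_{k-1} | x_k, x_0), with x_0 replaced by the denoiser's estimate.
        c0 = math.sqrt(ab_prev) * BETAS[k] / (1.0 - ab)
        ck = math.sqrt(ALPHAS[k]) * (1.0 - ab_prev) / (1.0 - ab)
        mean = [c0 * a + ck * b for a, b in zip(x0, x)]
        if k > 0:
            std = math.sqrt(BETAS[k] * (1.0 - ab_prev) / (1.0 - ab))
            x = [mu + std * rng.gauss(0.0, 1.0) for mu in mean]
        else:
            x = mean
    return x


def mean_regression() -> List[float]:
    """What an MSE-trained regressor converges to on these demos: the average demo."""
    return [sum(d[i] for d in DEMOS) / len(DEMOS) for i in range(H)]


def dist(a: List[float], b: List[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


if __name__ == "__main__":
    rng = random.Random(0)
    for _ in range(4):
        chunk = sample_action_chunk(rng)
        side = "left" if dist(chunk, LEFT) < dist(chunk, RIGHT) else "right"
        print(side, [round(v, 2) for v in chunk])
    print("regression", [round(v, 2) for v in mean_regression()])
```

Each sampled chunk commits to one complete detour, while `mean_regression()`, which stands in for a model trained to regress the average action, returns the all-zeros path straight through the obstacle. Denoising the whole chunk at once, rather than one action per step, is what keeps the sampled motion consistent over time.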

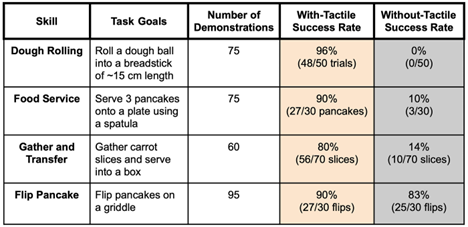

A sense of touch also helps robots learn. TRI's Soft-Bubble sensors [2] consist of an internal camera that observes an inflated, deformable outer membrane, and they go beyond measuring sparse force signals: they let a robot perceive spatially dense information about contact patterns, geometry, slip, and force. The robots perform better when they can “feel” their interactions with the environment (see Table 1).
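To illustrate what spatially dense contact information offers over a single force reading, here is a minimal Python sketch; it is not TRI's perception pipeline. It assumes the internal camera yields a depth image of the membrane, compares it with a reference image of the undeformed bubble, and extracts a contact mask, contact area, centroid, and mean indentation; slip is approximated as centroid motion between frames. The threshold, units, image format, and names (`contact_features`, `slip_estimate`, `ContactFeatures`) are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

DepthImage = List[List[float]]  # assumed format: depth in meters, one value per pixel


@dataclass
class ContactFeatures:
    area_px: int                             # number of pixels in contact
    centroid: Optional[Tuple[float, float]]  # (row, col) of contact center; None if no contact
    mean_indent: float                       # average indentation over contact pixels [m]


def contact_features(reference: DepthImage, current: DepthImage,
                     threshold: float = 0.002) -> ContactFeatures:
    """Compare the deformed membrane against its undeformed reference (hypothetical helper)."""
    contacts = []
    for r, (ref_row, cur_row) in enumerate(zip(reference, current)):
        for c, (d_ref, d_cur) in enumerate(zip(ref_row, cur_row)):
            # An object pressing on the bubble pushes the membrane toward the
            # internal camera, so the measured depth decreases.
            indent = d_ref - d_cur
            if indent > threshold:
                contacts.append((r, c, indent))
    if not contacts:
        return ContactFeatures(0, None, 0.0)
    n = len(contacts)
    centroid = (sum(p[0] for p in contacts) / n, sum(p[1] for p in contacts) / n)
    return ContactFeatures(n, centroid, sum(p[2] for p in contacts) / n)


def slip_estimate(prev: ContactFeatures, curr: ContactFeatures) -> Optional[float]:
    """Centroid displacement in pixels between two frames; None if either frame lacks contact."""
    if prev.centroid is None or curr.centroid is None:
        return None
    return math.hypot(curr.centroid[0] - prev.centroid[0],
                      curr.centroid[1] - prev.centroid[1])


if __name__ == "__main__":
    flat = [[0.05] * 5 for _ in range(5)]  # undeformed bubble, 5x5 toy image
    frame1 = [row[:] for row in flat]
    frame1[2][2] = frame1[2][3] = 0.045    # 5 mm indentation at two pixels
    frame2 = [row[:] for row in flat]
    frame2[2][3] = frame2[2][4] = 0.045    # same contact, shifted one pixel
    f1 = contact_features(flat, frame1)
    f2 = contact_features(flat, frame2)
    print(f1)
    print("slip (px):", slip_estimate(f1, f2))
```

A learned policy could consume hand-built features like these alongside camera images, or, more in the spirit of end-to-end learning, take the raw tactile image as an extra observation and learn its own features.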

Table 1: A real-world performance comparison between tactile-enabled and vision-only learned policies. (Source: TRI)

The Platform

Imagine a world where robots can whisk up your breakfast or flip a pancake effortlessly. That is the horizon TRI is reaching for with its approach. Using a variety of teleoperation tools, from simple joysticks to sophisticated bimanual devices, the team is rewriting the rules of robot education. With cutting-edge generative AI, they have taught robots more than 60 dexterous skills, such as cooking tasks and handling household tools, with each new skill taught in a single afternoon. Siyuan Feng, a researcher at TRI, explains that although the team guides the robots during teaching, robustness is built into the learning process so the robots can cope with real-world disturbances.
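Teleoperated demonstrations are typically recorded as time-aligned streams of observations and actions. Assuming that format (the article does not describe TRI's actual data layout), here is a minimal Python sketch of how one episode could be cut into training pairs for a policy that predicts a chunk of future actions from a short history of observations, the observation-horizon/prediction-horizon idea used in the Diffusion Policy paper [1]. The function name and default horizons are hypothetical, and this version simply skips windows that run past the episode boundaries.

```python
from dataclasses import dataclass
from typing import Any, List, Sequence


@dataclass
class TrainingSample:
    observations: List[Any]  # the last `obs_horizon` observations, oldest first
    actions: List[Any]       # the next `pred_horizon` actions, starting at the current step


def make_training_samples(obs: Sequence[Any], actions: Sequence[Any],
                          obs_horizon: int = 2, pred_horizon: int = 16) -> List[TrainingSample]:
    """Slice one teleoperated episode into (observation history, action chunk) pairs.

    Windows that would run past either end of the episode are skipped.
    """
    if len(obs) != len(actions):
        raise ValueError("observations and actions must be time-aligned")
    samples = []
    for t in range(obs_horizon - 1, len(obs) - pred_horizon + 1):
        samples.append(TrainingSample(
            observations=list(obs[t - obs_horizon + 1 : t + 1]),
            actions=list(actions[t : t + pred_horizon]),
        ))
    return samples


if __name__ == "__main__":
    # Toy episode: 20 timesteps with labeled placeholders instead of real sensor data.
    episode_obs = [f"obs{t}" for t in range(20)]
    episode_act = [f"act{t}" for t in range(20)]
    samples = make_training_samples(episode_obs, episode_act, obs_horizon=2, pred_horizon=8)
    print(len(samples), samples[0])
```

Because every skill is just another set of recorded episodes passed through the same slicing, adding a behavior means adding data rather than code.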

But the robots do more than follow commands. They use torque-based Operational Space Control, which lets them move smoothly and safely, and TRI has indicated that it plans to release this controller as open source.
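To make "torque-based Operational Space Control" more concrete, here is a minimal Python sketch of the textbook operational-space law `tau = J^T * Lambda * a_cmd`, where `Lambda = (J M^-1 J^T)^-1` is the task-space inertia and `a_cmd = kp * (x_des - x) - kd * xdot`, for a hypothetical two-link planar arm. It is not TRI's controller. It assumes point masses at the link tips and motion in a horizontal plane (no gravity), and it omits the Coriolis/centrifugal compensation and `Jdot * qdot` terms a full implementation would include. All parameters and names are illustrative.

```python
import math
from typing import List

Vec2 = List[float]
Mat2 = List[List[float]]

# Hypothetical arm parameters (illustrative only).
L1, L2 = 1.0, 1.0  # link lengths [m]
M1, M2 = 1.0, 1.0  # point masses at the link tips [kg]


def mat_mul(a: Mat2, b: Mat2) -> Mat2:
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def mat_t(a: Mat2) -> Mat2:
    return [[a[0][0], a[1][0]], [a[0][1], a[1][1]]]


def mat_inv(a: Mat2) -> Mat2:
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    return [[a[1][1] / det, -a[0][1] / det], [-a[1][0] / det, a[0][0] / det]]


def mat_vec(a: Mat2, v: Vec2) -> Vec2:
    return [a[0][0] * v[0] + a[0][1] * v[1], a[1][0] * v[0] + a[1][1] * v[1]]


def forward_kinematics(q: Vec2) -> Vec2:
    """End-effector (x, y) position for joint angles q = (q1, q2)."""
    q1, q2 = q
    return [L1 * math.cos(q1) + L2 * math.cos(q1 + q2),
            L1 * math.sin(q1) + L2 * math.sin(q1 + q2)]


def jacobian(q: Vec2) -> Mat2:
    """Maps joint velocities to end-effector velocity."""
    q1, q2 = q
    s1, c1 = math.sin(q1), math.cos(q1)
    s12, c12 = math.sin(q1 + q2), math.cos(q1 + q2)
    return [[-L1 * s1 - L2 * s12, -L2 * s12],
            [L1 * c1 + L2 * c12, L2 * c12]]


def mass_matrix(q: Vec2) -> Mat2:
    """Joint-space inertia of a two-link arm with point masses at the link tips."""
    c2 = math.cos(q[1])
    m11 = (M1 + M2) * L1**2 + M2 * L2**2 + 2 * M2 * L1 * L2 * c2
    m12 = M2 * L2**2 + M2 * L1 * L2 * c2
    m22 = M2 * L2**2
    return [[m11, m12], [m12, m22]]


def osc_torques(q: Vec2, dq: Vec2, x_des: Vec2, kp: float = 100.0, kd: float = 20.0) -> Vec2:
    """Simplified OSC: tau = J^T * Lambda * (kp * (x_des - x) - kd * xdot)."""
    x = forward_kinematics(q)
    J = jacobian(q)
    xdot = mat_vec(J, dq)  # end-effector velocity
    acc_cmd = [kp * (xd - xi) - kd * v for xd, xi, v in zip(x_des, x, xdot)]
    lam = mat_inv(mat_mul(mat_mul(J, mat_inv(mass_matrix(q))), mat_t(J)))  # task-space inertia
    force = mat_vec(lam, acc_cmd)  # task-space force
    return mat_vec(mat_t(J), force)  # map the force to joint torques


if __name__ == "__main__":
    # Elbow bent 90 degrees; ask the tip to move 10 cm in +y.
    print(osc_torques([0.0, math.pi / 2], [0.0, 0.0], [1.0, 1.1]))
```

Weighting the commanded acceleration by `Lambda` makes the end effector behave like a spring-damper in task space regardless of arm configuration. Near singular poses (for example `q2 = 0`, arm fully stretched) `J M^-1 J^T` becomes ill-conditioned and this sketch produces very large or undefined torques; a real controller would add damping or regularization there.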

Simulation, once set aside in favor of hands-on demonstrations, is making a comeback. TRI is building a curriculum for its "robot kindergarten" in both the physical world and simulation. The team has already taught more than 60 behaviors, and the end goal is robots that can surprise us by pulling off feats they were never explicitly taught.

With its sights set high, TRI aims to teach hundreds of new robot skills by the end of the year and 1,000 by 2024. Its simulation work is also laying the groundwork for sharing skills across robots, so that a lesson learned by one robot can benefit all of them. The future, it seems, is a dance of metal and AI.

What’s Next?

Ben Burchfiel, who leads the dexterous manipulation team, says, "We're pushing the boundaries with scalable algorithms, drawing inspiration from the leaps in language and image tech." Yet robotics still lacks the rich, diverse data it needs, and TRI is working to build it. The goal is large behavior models that combine broad understanding with tactile skill, enabling robots to devise new dexterous actions on their own. This work promises not only to redefine robotics but also to spur new innovations in automation, machine learning, and human-robot interaction.

Sources

1. Chi, Cheng, Siyuan Feng, Yilun Du, Zhenjia Xu, Eric Cousineau, Benjamin Burchfiel, and Shuran Song. Diffusion Policy: Visuomotor Policy Learning via Action Diffusion. Accessed June 1, 2023. https://arxiv.org/abs/2303.04137.

2. Soft Bubble Grippers for Robust and Perceptive Manipulation. Accessed December 29, 2023. https://punyo.tech/.