Wireless Technology Couples with Next-Gen Audio Designs for Musicians

By Jon Gabay for Mouser Electronics

Humans have an uncanny ability to master and refine a technology even before we understand it. For example, after many generations of designing pianos, the shape evolved to follow the pink noise curve because it sounded better to discerning human ears. We didn't understand why. We just did, and it was right.

It was the wonders of our hearing that gave us the ability to do so. Humans are refined sensor systems, of which hearing is an integral part. There are no more discerning ears than those of a musician, and designing modern-day audio equipment to satisfy a musician's ear can be especially challenging. Products designed for musicians need features and flexibility. For example, some designs need to minimize distortion and others need to distort a signal to a controlled level - as any modern guitar player will tell you.

Analog to Digital Conversion

All audio technology and designs lived in the analog realm for several generations of music and musicians. Instruments were mechanical in nature and used air, striking, or oscillating excitation energy to create sounds and notes. Recorders and players were analog, as well.

Initially used for low bandwidth voice, the gramophone used needles to vibrate and wave-guides to direct and amplify (Figure 1).

Figure 1: Mechanical principles alone allowed the storing and playback of voice and music. Instruments of the era used no electricity or electronics either. Simple as it was, this technology changed the world of music.

As technology progressed, wider bandwidth recording and playing devices caught the ear of the music world, and that marriage forever changed the way we appreciate and enjoy music.

Electronics revolutionized this yet again. Early tube amplifiers made the first electronic instruments and amplifiers possible. The Theremin (Figure 2) was among the first electronic instruments and used capacitive coupling to sense the proximity of a musician's hands, which controlled pitch and volume.

Figure 2: The Theremin was among the first electronic instruments based on tube technology that could also use tubes to amplify the signal in real time. (Source: Wikipedia)

The ability to capture and store analog data electronically gave musicians the recording industry. Wire and magnetic tape allowed electrified signals to be stored and replayed non-destructively. These capabilities drove the creation of lower-cost, smaller-sized, higher-fidelity players for the masses. They also spawned new generations of electronic instruments such as keyboards and guitars.

Let's Get Digital

The earliest voice streams were single-bit, pulse-width-modulated signals that could be sent over wires and be recognized and deciphered by a human ear. This analog form of digital signaling quickly taught us that wider resolution and faster speeds meant clearer signals. From 4 to 8 bits, then 16, and now 32 bits wide, digital words can house tremendous dynamic ranges of signal levels.
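
As a rough illustration of how word width maps to dynamic range, the sketch below applies the textbook figure for an ideal quantizer driven by a full-scale sine wave (about 6.02 dB per bit plus 1.76 dB). The function name is made up for this example, and real converters fall short of these ideal numbers because of analog noise and distortion.

```python
import math


def ideal_dynamic_range_db(bits: int) -> float:
    """Theoretical SNR of an ideal N-bit quantizer with a full-scale sine input."""
    # 20*log10(2^N) is the ratio of full scale to one step;
    # 10*log10(1.5) accounts for sine-wave power versus uniform quantization noise.
    return 20 * math.log10(2 ** bits) + 10 * math.log10(1.5)


for bits in (4, 8, 16, 24, 32):
    print(f"{bits:2d}-bit word: {ideal_dynamic_range_db(bits):6.1f} dB")
```

A 16-bit word works out to roughly 98 dB under this idealized model, and every extra bit adds about 6 dB.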

Coupling this with faster sample and playback rates means a designer has direct control of frequency range. Wider audio bandwidth means that not only primary notes can be stored and reproduced, but also their rich harmonics.
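
The link between sample rate and harmonic content can be sketched with the Nyquist limit: only frequencies up to half the sample rate can be represented. The helper below is a hypothetical name for this example, and it counts how many harmonics of a note (the fundamental counted as the first) fit at or below that limit.

```python
def harmonics_within_nyquist(fundamental_hz: float, sample_rate_hz: float) -> int:
    """Count harmonics (fundamental = 1st) at or below half the sample rate."""
    nyquist_hz = sample_rate_hz / 2
    return int(nyquist_hz // fundamental_hz)


# Concert A (440 Hz) at three common sample rates
for rate in (44_100, 48_000, 192_000):
    count = harmonics_within_nyquist(440.0, rate)
    print(f"{rate} Hz sampling keeps {count} harmonics of A440")
```

Whether the upper harmonics are audible is a separate question; the sketch only shows how much of the harmonic series a given rate can capture.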

Designing for higher frequency response (faster sampling rates), higher resolutions (wider bit samples), and higher signal purity (low distortion) are just some of the unique constraints engineers face. Equalizers, filters, effects, amplification, mixing, and recording are but a few examples of modern audio needs.

Modern-day high-speed A/Ds and D/As, as well as analog and digital filters, amplifiers, and mixers, have proven themselves and continue to develop and improve. Device makers know their competition is listening.

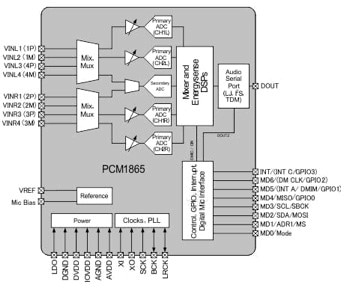

A modern example of an audio A/D converter with flexibility and functionality is the Texas Instruments PCM186x/PCM186x-Q1 family of audio ADCs (Figure 3). These come in 2- and 4-channel versions, and contain both hardware- and software-controlled gain stages. The hardware gain amplifier is an analog front end with a fixed gain, selectable as 20 or 32 dB, that is suitable for microphone interfacing.

Figure 3: Single-ended or differential analog signals can feed the PCM186x/PCM186x-Q1 multi-channel, universal A/D audio front end with internal DSP. (Source: Texas Instruments)

The devices' digital mixing functions can accept up to eight analog inputs and use an internal DSP processor to perform zero-cross detection and clipping suppression. The devices also accept a range of front-end signal levels, from millivolts to 2.1 Vrms. These signals can feed the single-ended inputs or a differential stage for higher common-mode noise reduction.
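
One common use of zero-cross detection is to defer a gain change until the waveform passes through zero, so the step in level does not produce an audible click. The sketch below is a generic illustration of that idea, not the PCM186x's internal algorithm; the function name and sample values are assumptions made for this example.

```python
from typing import List


def apply_gain_at_zero_cross(samples: List[float],
                             old_gain: float,
                             new_gain: float) -> List[float]:
    """Use old_gain until the first zero crossing, then switch to new_gain."""
    out = []
    switched = False
    prev = None
    for s in samples:
        # A crossing is a sample of exactly zero or a sign change
        # relative to the previous sample.
        if not switched and prev is not None:
            if s == 0 or (s > 0) != (prev > 0):
                switched = True
        out.append(s * (new_gain if switched else old_gain))
        prev = s
    return out


print(apply_gain_at_zero_cross([0.5, 0.2, -0.1, -0.4], 1.0, 2.0))
```

The first two samples keep the old gain because the signal has not yet crossed zero; the new gain takes effect from the first negative sample onward.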

An internal PLL generates all control and sampling timing for the channels' 48 kHz sampling rates. This is higher than CD quality but lower than most recording mixers. Still, the 110 dB, 24-bit audio quality does make this suitable for microphone and voice-quality front ends that have limited frequency demands.

Thanks for the MEMS

As audio technology couples more closely with wireless technology, device manufacturers are using newer MEMS-based techniques to craft solutions that are more immune to interference from local sources of RF.

Parts such as the AKUSTICA AKU24x and AKU44x families of microphones use MEMS technology to provide high-performance audio with immunity to radio-frequency interference (RFI) and electromagnetic interference (EMI). Featuring HD-voice quality performance, the integrated MEMS microphones use a pulse-density modulated data stream for direct digital output. The robust Faraday cage construction allows these modules to mount directly inside a microphone or to a PCB for handheld and portable devices.
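
To give a feel for what a pulse-density modulated stream is, here is a minimal sketch of a first-order sigma-delta modulator that turns samples into a 1-bit stream, plus a crude averaging decoder that turns the bit density back into amplitudes. The function names, the 3.072 MHz clock, and the decimation ratio of 64 are illustrative assumptions, not values from the microphone datasheets, and a production decimator would use proper CIC or FIR filtering rather than a simple average.

```python
import math


def pdm_encode(samples):
    """First-order sigma-delta modulator: values in [-1, 1] -> list of 0/1 bits."""
    bits = []
    integrator = 0.0
    feedback = 0.0
    for x in samples:
        # Accumulate the error between the input and the last output level
        integrator += x - feedback
        bit = 1 if integrator >= 0 else 0
        feedback = 1.0 if bit else -1.0
        bits.append(bit)
    return bits


def pdm_decode(bits, window):
    """Crude decimator: average each non-overlapping window back to [-1, 1]."""
    return [
        sum(2 * b - 1 for b in bits[i:i + window]) / window
        for i in range(0, len(bits) - window + 1, window)
    ]


# Assumed rates for illustration: 1 kHz tone, 3.072 MHz PDM clock, decimate by 64
PDM_RATE_HZ = 3_072_000
DECIMATION = 64
tone = [0.5 * math.sin(2 * math.pi * 1_000 * n / PDM_RATE_HZ) for n in range(3_072)]
pcm = pdm_decode(pdm_encode(tone), DECIMATION)
print(len(pcm), "PCM samples recovered, peak about", round(max(pcm), 2))
```

The density of ones tracks the input level: a louder positive signal produces more ones per window, which is why a single digital line can carry the audio.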

More DSP Please

To date, DSP functionality has been integrated into the front ends, but these are normally dedicated, fixed-function DSP blocks that perform only specific functions. Many musical devices such as keyboards or effects pedals need more horsepower. Parts like the Analog Devices ADAU145x SigmaDSP digital audio processor are fully programmable for enhanced sound processing. Members of this family are capable of processing up to 48 channels through four serial input and output ports with up to 32-bit samples at rates up to 192 kSamples/s.

Internal clocks derive all critical frequencies using an internal PLL that minimizes noise and assures accuracy. Stand-alone functionality can be implemented using a self-boot mode from serial EEPROM. This allows the internal memory, core processor, and serial interfaces to be loaded with optimized code and run autonomously. You can also implement peripheral-style functionality since the part can function as a master or a slave over I²C or SPI.

The 6- to 10-bit A/D channels use a sigma-delta conversion technique to oversample local microphones or even perform noise cancellation. Fourteen general-purpose digital I/O pins can be used to scan buttons, drive LEDs, or interface to other digital parts.

The Wireless Factor

Wireless communications is on the front lines of advancing music technology. Any modern musician or recording engineer will tell you the elimination of wires is a blessing, as long as it doesn't interfere with the sound quality.

Wireless spread spectrum technologies provide reliable, noise-tolerant, high-speed, high-bandwidth data links for musicians' needs. For example, instruments, players, and recorders can send and receive high-fidelity, multi-channel audio information and coexist wirelessly in the same proximity.

Spread spectrum also has distinct advantages over older, narrowband modulated signaling techniques. First, designers are allowed to use higher transmit power levels with spread spectrum than they can with narrowband. Narrowband modulation signaling is typically limited to 10µW of power, whereas spread spectrum can use up to 5W, allowing for longer distances and higher reliability of signal reception.

Spread spectrum is also more immune to noise. Amplitude modulation leaves signals sensitive to impulse noise like sparks or distant lightning strikes. Frequency Shift Keying (FSK) and Phase Shift Keying (PSK), for example, are sensitive to frequency-stable noise sources such as motors or even fluorescent lights.

Complex protocols like Ack/Nack can request retransmission of packets that suffered interference so that pure data streams can be reassembled, but this can add delays. Instead, noisy packets can be discarded if any new and unexpected noise sources emerge. Because only a short time is spent in any one band, real-time signal hits can be relatively imperceptible.
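
The trade-off between retransmitting and discarding can be sketched as a receiver that never waits: when a packet is missing or was thrown away as noisy, it fills the gap from the previous frame at reduced level so that repeated losses fade toward silence. This is a simplified, hypothetical concealment scheme written for this article, not the behavior of any particular wireless protocol.

```python
from typing import Dict, List, Optional


def conceal_stream(packets: Dict[int, Optional[List[float]]],
                   count: int,
                   frame_len: int) -> List[float]:
    """Rebuild audio from packets keyed by sequence number.

    A missing key or a None value marks a packet that was lost or
    discarded as noisy; no retransmission is requested.
    """
    out: List[float] = []
    previous = [0.0] * frame_len
    for seq in range(count):
        frame = packets.get(seq)
        if frame is None:
            # Fill the gap with the previous frame at half level
            frame = [0.5 * s for s in previous]
        previous = frame
        out.extend(frame)
    return out


received = {0: [1.0, 1.0], 2: [0.25, 0.25]}  # packets 1 and 3 never arrived
print(conceal_stream(received, count=4, frame_len=2))
```

Latency stays fixed because the output never stalls; the cost is a brief, attenuated repeat in place of the lost audio.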

As a result, live and streaming performance data can be exchanged in real time, with low latency and no perceptible delays in the signal chain. In addition, new features and functions can be added to old equipment, letting it do what was not possible or feasible in the past. For example, a sound man can walk around a performance center during a live concert and adjust the mixing board virtually using a wireless handheld device.

While some spread spectrum protocols are being used for audio applications for the masses, they may not be good enough for musicians' needs. For example, Bluetooth technology has been widely adopted and embraced for wireless speakers, headphones, and end-user devices, but has limited fidelity for a musician's or recording engineer's needs.

A lot has to do with the types of encoders and decoders (CODECs) that are used for compression and decompression. Early on, Bluetooth used Low Complexity Sub-Band Coding (SBC), but more recently, the AptX CODEC (by CSR) has gained acceptance and is touted as being able to provide lossless, CD-quality sound. But this is not sufficient for audio engineers and musicians, who typically want 192 kHz sampling rates with samples at least 24 bits wide.
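
The gap between CD quality and what recording engineers ask for is easy to quantify, since the raw bit rate of uncompressed PCM is simply sample rate times word width times channel count. The sketch below uses a hypothetical helper name and ignores any packet or protocol overhead.

```python
def pcm_bit_rate(sample_rate_hz: int, bits_per_sample: int, channels: int) -> int:
    """Raw bit rate of uncompressed PCM audio in bits per second."""
    return sample_rate_hz * bits_per_sample * channels


cd_stereo = pcm_bit_rate(44_100, 16, 2)
studio_stereo = pcm_bit_rate(192_000, 24, 2)

print(f"CD stereo:      {cd_stereo / 1e6:.3f} Mbit/s")
print(f"192 kHz/24-bit: {studio_stereo / 1e6:.3f} Mbit/s")
print(f"Ratio:          {studio_stereo / cd_stereo:.2f}x")
```

A 192 kHz, 24-bit stereo stream needs more than six times the raw data rate of CD audio, which is the reason a link sized for CD-quality sound falls short.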

This means that either proprietary spread spectrum protocols or higher data rate protocols (like Wi-Fi) are needed, and either approach can be used effectively.

Time-to-Market Constraints

Standardized and established protocols like Wi-Fi are best for fast time-to-market needs. Wi-Fi can coexist fairly well in a crowded airspace, and a number of modules and design tools are readily available to help designers get up to speed quickly. While modules can save design time, they do come at a slightly higher cost. Still, the module makers take care of all the certifications worldwide, and it is their responsibility to keep current with specifications and adherence to regional and global specifics.

The use of modules does not prevent a design team from designing their own solution. Concurrent engineering allows production to begin with a module while in-house designs are refined, tested, and certified. This is the way to go if production volumes dictate the need, but for most small- to medium-volume production runs, modules work best.

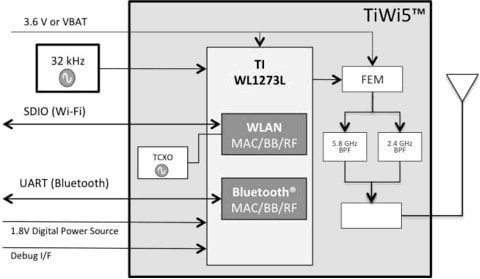

One example of a well-performing, pre-certified module with enough bandwidth and high enough data rates to satisfy most any audio task comes from LS Research. The LS Research TiWi5 Transceiver Module can pass up to 65 Mbits/s for the highest-end audio and control (Figure 4). The 1.8 V to 3.6 V surface-mountable module measures only 18 mm x 13 mm x 1.7 mm high and operates in the 2.4 and 5 GHz ranges, supporting 802.11 a/b/g/n modes. The serial I/O helps reduce I/O pin counts to host micros, keeping PCB areas smaller.

Figure 4: Pre-certified modules such as the TiWi5 module include PHY/MAC and protocol handling. Serial interfaces such as the UART are supported by virtually every microcontroller and microprocessor, simplifying interfacing, test, and debug. (Source: LS Research)
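
A quick budget shows what a 65 Mbits/s link could mean for uncompressed audio. The sketch below estimates how many mono channels fit; the helper name is hypothetical, and the 50% usable-throughput figure is purely an assumption for illustration, since real throughput depends on protocol overhead, range, and interference and is not stated here for the TiWi5.

```python
def max_audio_channels(link_bps: int,
                       sample_rate_hz: int,
                       bits_per_sample: int,
                       usable_fraction: float) -> int:
    """Whole number of uncompressed mono channels that fit in the usable link rate."""
    per_channel_bps = sample_rate_hz * bits_per_sample
    return int(link_bps * usable_fraction // per_channel_bps)


LINK_BPS = 65_000_000      # peak rate quoted for the module
USABLE_FRACTION = 0.5      # assumed share left after overhead (hypothetical)

print("192 kHz/24-bit channels:",
      max_audio_channels(LINK_BPS, 192_000, 24, USABLE_FRACTION))
print("44.1 kHz/16-bit channels:",
      max_audio_channels(LINK_BPS, 44_100, 16, USABLE_FRACTION))
```

Even with half the peak rate set aside, several studio-grade channels fit, which illustrates the headroom a higher data rate protocol offers over a compressed consumer link.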

Other Routes

Designing your own wireless solutions and protocols is best for high-volume production. Here, parametric search engines can be a helpful tool. Link portals can also provide good-quality, up-to-date information.

Another thing to keep in mind is the frequency response range. For musical instruments, this can be very important. For example, a bass player needs lower frequency response than a flute player. Many wireless links, for example, start at 80 Hz, yet some start at 10 Hz.
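
To see why the low end of a link's frequency response matters, the sketch below converts the lowest note of a few instruments to its fundamental frequency using the standard equal-temperament formula (A4 = 440 Hz, MIDI note 69) and flags the ones that fall below a link's lower cutoff. The instrument list and function names are illustrative choices for this example.

```python
def midi_to_hz(note: int, a4_hz: float = 440.0) -> float:
    """Equal-temperament frequency of a MIDI note number (A4 = note 69)."""
    return a4_hz * 2 ** ((note - 69) / 12)


# Lowest standard-tuning notes as MIDI numbers
LOWEST_NOTES = {
    "5-string bass (B0)": 23,
    "4-string bass (E1)": 28,
    "guitar (E2)": 40,
    "flute (C4)": 60,
}


def below_cutoff(cutoff_hz: float):
    """Instruments whose lowest fundamental sits below the link's cutoff."""
    return [name for name, note in LOWEST_NOTES.items()
            if midi_to_hz(note) < cutoff_hz]


for cutoff in (80.0, 10.0):
    print(f"Link starting at {cutoff:.0f} Hz loses fundamentals of:",
          below_cutoff(cutoff) or "none")
```

With an 80 Hz cutoff, both bass tunings lose their lowest fundamentals, while a link that starts at 10 Hz passes everything in the list.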

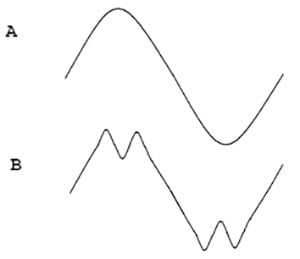

And if you do have the bandwidth, then use it. A non-compressed waveform will be truer than a compressed waveform (Figure 5). Most will never hear the difference, but if your competitor provides a cleaner sound than you, it may make the difference between a product's success and its failure.

Figure 5: An uncompressed waveform (A) is the purest, and a refined listener's ear can hear the subtle but present distortions that a compressed waveform (B) carries with it. (Source: author)

Conclusions

Music and audio are becoming increasingly important and modern technology is addressing the increasing demands of the refined listener, as well as creators of music. Several good manufacturers, parts, and tools are ready to be called upon for next generation designs.

Fortunately, many engineers are also musicians, and vice versa. It seems we find comfort and creativity within a structured and logical domain. It is often constraining in some ways, but freeing nevertheless.

Engineering sound requires sound engineering. The human ear can distinguish between 1% and 0.1% resistor tolerances in an R-2R D/A ladder, for example. Listen to your customers or they won't be listening to you.
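
For readers who want to put numbers on the resistor tolerance point, here is a minimal sketch that models an unloaded, voltage-mode R-2R ladder with randomly mismatched resistors and reports the worst-case output error in LSBs. The function names, the 10 kΩ nominal value, the uniform error distribution, and the fixed random seed are all assumptions for illustration; the sketch says nothing about audibility, only about how the electrical error scales with tolerance.

```python
import random


def r2r_output(code, bits, series, legs, term, vref=1.0):
    """Unloaded output of a voltage-mode R-2R ladder.

    legs[0] is the LSB leg and legs[-1] the MSB leg; series[i] joins
    node i to node i + 1; term is the terminating resistor to ground.
    """
    v_th, r_th = 0.0, term
    for i in range(bits):
        v_bit = vref if (code >> i) & 1 else 0.0
        # Thevenin-combine the network so far with this bit's leg
        v_th = (v_th * legs[i] + v_bit * r_th) / (r_th + legs[i])
        r_th = r_th * legs[i] / (r_th + legs[i])
        if i < bits - 1:
            r_th += series[i]
    return v_th


def worst_error_lsb(bits, tolerance, seed=1, r=10_000.0):
    """Largest deviation from the ideal output, in LSBs, for one random ladder."""
    rng = random.Random(seed)

    def vary(nominal):
        return nominal * (1 + tolerance * rng.uniform(-1, 1))

    series = [vary(r) for _ in range(bits - 1)]
    legs = [vary(2 * r) for _ in range(bits)]
    term = vary(2 * r)
    lsb = 1.0 / 2 ** bits
    return max(abs(r2r_output(c, bits, series, legs, term) - c * lsb) / lsb
               for c in range(2 ** bits))


for tol in (0.01, 0.001):
    print(f"{tol:.1%} resistors, 16-bit ladder: "
          f"worst error {worst_error_lsb(16, tol):.1f} LSB")
```

Because the same seed is used for both runs, the two ladders share the same mismatch pattern at different scales, so the comparison isolates the effect of tolerance alone.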