Why the First True AI Revolution Will Happen on the Factory Floor

CES 2026 Reveals the Paradigm Shifts Coming to Industrial Operations

(Source: Siemens)

Most public conversations about artificial intelligence (AI) center on apps, chat tools, and productivity software. But that's not where the real pressure is right now.

The true test for AI is happening in factories, where this technology doesn't get the benefit of the doubt. There, AI systems control physical equipment, so errors are visible in real time and can be expensive. Decisions can affect uptime, product quality, cost, and, most importantly, safety. When something goes wrong in a manufacturing facility, it is noticed immediately.

That is why factories are becoming the most revealing test of whether AI actually works. The industry is not focused on whether AI demos well or sounds impressive, but on whether it can operate under pressure, alongside machines and people, within real constraints.

This industrial interest in AI was on full display at the 2026 Consumer Electronics Show (CES) in Las Vegas, where many companies presented concrete ways AI is starting to change industrial workflows. Major topics of discussion included using digital twins to make decisions before committing to physical changes, adaptive production systems, and companies testing their approaches in their own facilities.

This is what it looks like when AI leaves a slide deck and enters real operations.

Why Factories Come First

At this year’s event, the “factory-first” theme came through clearly during Siemens’ CES keynote, which was more a series of conversations than a presentation.[1] Roland Busch, Siemens’ CEO, was joined on stage by leaders from NVIDIA, PepsiCo, Microsoft, and others. The theme throughout these discussions was consistent: the most immediate use of AI is not in consumer applications, but in industrial systems.

There are several reasons for this focus. Factories already run on structured operational data. Industrial equipment is built with sensors, controllers, and monitoring systems, so machines, conveyor belts, robotics, quality checks, and even power systems generate data as part of normal operations. In manufacturing, that data is also tied directly to throughput, quality, maintenance, and cost. Part of the reason digital twins have taken off is that they don’t have to invent data: because they feed directly from the physical systems they represent, they can simply mirror it. A survey of 75 industrial executives found that nearly 44 percent of their organizations have already implemented digital twins, and another 15 percent are planning deployment.[2]

Factories also operate in closed loops. Machines respond to feedback from sensors, controllers adjust, and operators intervene when something abnormal occurs. That loop of measuring, responding, and adjusting keeps production stable, and AI can be added to environments that already rely on it. As a result, expectations for industrial AI differ from those for consumer or office tools. AI in a factory doesn't get to be vague or cryptic: the data flows are structured and tied to outcomes, so the consequences of getting it wrong are immediately visible.

“When an AI enters a physical system, it stops being a feature. It becomes a force. A force with direct real world impact,” said Busch.
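For readers less familiar with industrial control, the measure, respond, and adjust loop described above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not how any company mentioned here implements control: it assumes a simple proportional controller, a toy process whose output simply equals its commanded setting, and an invented `alarm_band` beyond which the loop stops adjusting and hands the decision to an operator. The names `control_step`, `simulate`, `gain`, and `alarm_band` are made up for this example; real plants typically run tuned schemes such as PID control on PLCs.

```python
from dataclasses import dataclass


@dataclass
class LoopResult:
    setting: float  # actuator command to apply next (e.g., conveyor speed)
    alarm: bool     # True when the deviation is too large to handle automatically


def control_step(measured: float, target: float, setting: float,
                 gain: float = 0.5, alarm_band: float = 20.0) -> LoopResult:
    """One hypothetical pass of a measure -> respond -> adjust loop."""
    error = target - measured
    if abs(error) > alarm_band:
        # Abnormal condition: hold the current setting and escalate to a person.
        return LoopResult(setting=setting, alarm=True)
    # Normal condition: nudge the actuator in proportion to the error.
    return LoopResult(setting=setting + gain * error, alarm=False)


def simulate(target: float, start: float = 90.0, steps: int = 10) -> list[float]:
    """Run the loop against a toy process whose output equals its setting."""
    setting = start
    history = []
    for _ in range(steps):
        measured = setting  # toy assumption: measurement tracks the command exactly
        result = control_step(measured, target, setting)
        if result.alarm:
            break  # stop adjusting; an operator takes over
        setting = result.setting
        history.append(round(setting, 3))
    return history


if __name__ == "__main__":
    print(simulate(100.0))
    print(control_step(measured=50.0, target=100.0, setting=50.0).alarm)  # True
```

Running `simulate(100.0)` moves the setting from 90 toward the target of 100, halving the remaining error on each pass. The explicit alarm path is the point of the sketch: in a closed loop, a bad adjustment shows up in the very next measurement.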

Digital Twins Aren’t Just Visualization Tools

Digital twins came up repeatedly at CES, but not in the abstract way they are often discussed. The focus wasn't on polished 3D models or virtual replicas built for visualization's sake, but on how companies are already using digital twins as decision-making tools. The difference may seem subtle: traditionally, a digital twin helped explain why things happen; now major companies are using it to test what should happen next.

Part of what makes that possible is compute. Several speakers during the keynote explained that large-scale graphics processing units (GPUs) are changing what engineers can realistically simulate. Instead of running only a few scenarios because of time and cost constraints, teams can simulate hundreds of thousands of variations before anything changes in the real world, trying out layout changes, process tweaks, and throughput adjustments, and probing for failure points.
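To make the idea of testing variations in software concrete, here is a deliberately tiny, hypothetical stand-in for a digital twin in Python. It assumes a three-station serial line (the station names and per-machine rates in `STATION_RATES` are invented) whose throughput is set by its slowest station, enumerates every layout that fits a machine budget, and probes each layout's weakest point by removing one machine at a time. Production digital twins use far richer physics and discrete-event models running on GPU-scale compute; this sketch shows only the pattern of scoring candidates before any physical change is made.

```python
from itertools import product

# Hypothetical per-machine rates (units/hour) for a three-station serial line.
STATION_RATES = {"fill": 120.0, "cap": 150.0, "pack": 90.0}


def line_throughput(machines: dict[str, int]) -> float:
    """Toy twin: a serial line can only run as fast as its slowest station."""
    return min(STATION_RATES[s] * n for s, n in machines.items())


def worst_case_after_one_failure(machines: dict[str, int]) -> float:
    """Lowest throughput if any single machine on the line goes down."""
    worst = line_throughput(machines)
    for station, count in machines.items():
        degraded = dict(machines)
        degraded[station] = count - 1  # a station with one machine drops to zero
        worst = min(worst, line_throughput(degraded))
    return worst


def sweep(max_per_station: int = 4, budget: int = 8):
    """Score every layout within the machine budget, best first."""
    stations = list(STATION_RATES)
    results = []
    for counts in product(range(1, max_per_station + 1), repeat=len(stations)):
        if sum(counts) > budget:
            continue  # over budget: discard without building anything
        layout = dict(zip(stations, counts))
        results.append((line_throughput(layout),
                        worst_case_after_one_failure(layout),
                        layout))
    # Rank by nominal throughput, then by resilience to a single failure.
    results.sort(key=lambda r: (r[0], r[1]), reverse=True)
    return results


if __name__ == "__main__":
    best = sweep()[0]
    print(best)  # (270.0, 150.0, {'fill': 3, 'cap': 2, 'pack': 3})
```

In this toy case, the best layout within the budget still drops to 150 units/hour if one of its two capping machines fails, which is exactly the kind of failure point a team would rather discover in simulation than on the floor.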

Busch was joined on stage by Athina Kanioura, Chief Strategy and Transformation Officer at PepsiCo. Kanioura shared how PepsiCo uses digital twins in manufacturing, warehousing, and logistics to redesign its existing operations.[3]

In one case, PepsiCo used a digital twin of one of its US facilities that was somewhat outdated and worn down. The company was able to simulate layouts, workflows, and throughput to upgrade the facility without a complete redesign. According to Kanioura, the chosen approach helped drive a 20 percent efficiency improvement in just three months. That translated to an estimated 10–15 percent reduction in capital expenditure by avoiding unnecessary physical redesigns. This example shows how digital twin technology can benefit not only future factories but also existing facilities with legacy layouts and pressing constraints.

A digital twin used as a visual aid may look impressive; a digital twin used as an operational tool can change how decisions are made on the factory floor.

The “Factory as a Robot”

Rather than treating AI as something bolted onto machines, some companies are framing the entire factory as an intelligent system.

During the keynote discussions, Busch was joined by NVIDIA CEO Jensen Huang, who referred to this new industrial concept as the “outside-in AI system,” in which the entire factory will be one giant robot orchestrating the other robots inside it. Instead of treating smart machines as operating independently, the concept focuses on a coordinated system managing an entire operation. The factory doesn't just house automation; it becomes the automated system.

This framing strips away some of the futuristic autonomy associated with AI and makes it more about day-to-day coordination. With AI integrated, systems can decide which lines run harder when demand shifts, where work should move if a machine is lagging or down, and how to adjust production when materials arrive late. Most of this still depends on people monitoring dashboards and making judgment calls, but the “factory as a robot” model pushes more of that functionality into software.
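The coordination decisions described above (which lines run harder, where work moves when a machine lags or goes down) can be illustrated with a simple allocation policy. The Python sketch below is hypothetical: it assumes each line has a nominal `capacity` and a `health` factor reported by monitoring (1.0 normal, 0.5 lagging, 0.0 down), splits demand in proportion to effective capacity, and returns any shortfall for a human planner instead of forcing it onto equipment. Neither Siemens nor NVIDIA described their orchestration logic at this level of detail; proportional allocation is simply one easy-to-read policy chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Line:
    name: str
    capacity: float      # nominal units/hour (hypothetical)
    health: float = 1.0  # 1.0 = normal, 0.5 = lagging, 0.0 = down


def allocate(demand: float, lines: list[Line]) -> tuple[dict[str, float], float]:
    """Split demand across lines by effective capacity.

    Returns (plan, shortfall); the shortfall is left for a person to resolve.
    """
    effective = {line.name: line.capacity * line.health for line in lines}
    total = sum(effective.values())
    if total == 0:
        return {name: 0.0 for name in effective}, demand
    served = min(demand, total)  # never schedule beyond what the lines can do
    plan = {name: served * cap / total for name, cap in effective.items()}
    return plan, demand - served


if __name__ == "__main__":
    lines = [Line("A", 100.0), Line("B", 100.0), Line("C", 50.0)]
    print(allocate(200.0, lines))  # ({'A': 80.0, 'B': 80.0, 'C': 40.0}, 0.0)
    lines[1].health = 0.0          # line B goes down
    print(allocate(200.0, lines))  # ({'A': 100.0, 'B': 0.0, 'C': 50.0}, 50.0)
```

When line B goes down, its share moves to A and C up to their capacity, and the remaining 50 units/hour surface as a shortfall, which mirrors the point that people still make the final judgment calls.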

At CES, Busch shared that Siemens plans to launch its first fully AI-driven adaptive manufacturing site in Germany in 2026. The facility will run an AI layer on top of Siemens’ existing automation and operations software; that layer consumes real-time data from digital twins and uses it to influence how production runs. The goal, according to Busch, is to add an “AI brain” on top of software-defined automation, so that “you can really control the manufacturing” in real time. Siemens will also work on this concept with Foxconn in the US, creating factories that will build AI supercomputers.

The AI Factory

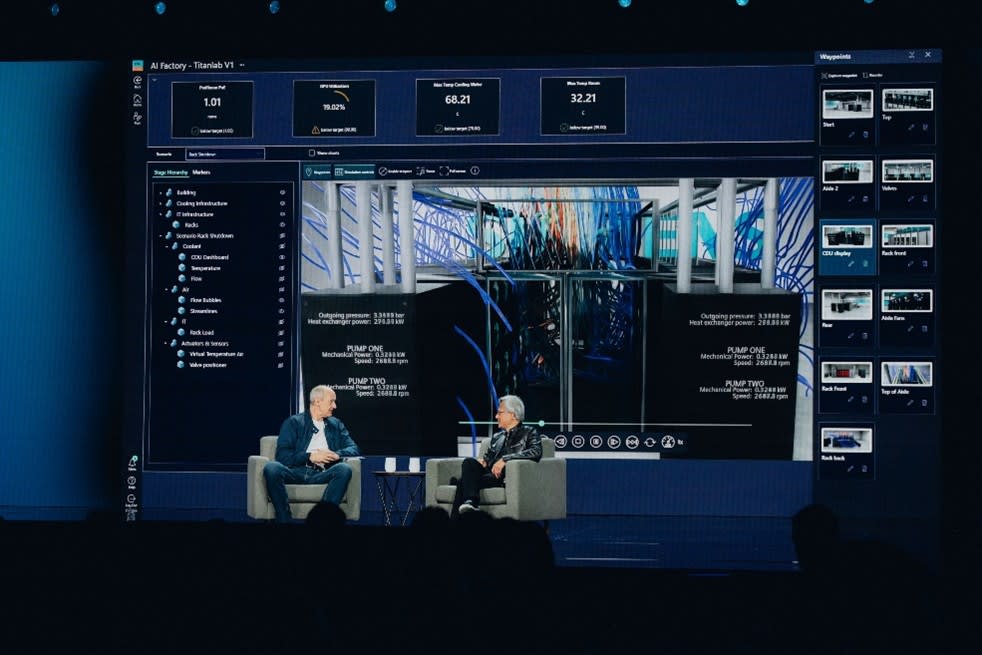

The conversation at CES about robotic factories led to another term that kept coming up during the Siemens-NVIDIA discussion: the “AI factory” (Figure 1). Because it isn't yet a standard industry term, the two took time to explain it. An AI factory is a massive facility built to train and run large AI systems. These are not traditional data centers.[4] They are purpose-built sites with high-density compute resources, extreme power demands, and complex cooling requirements. Huang described them as gigawatt-scale projects that can cost US$50 billion.

Figure 1: Siemens and NVIDIA will jointly develop a repeatable blueprint for next-generation AI factories. (Source: NVIDIA)

“If you're building something that costs $50 billion, you want to make sure there is absolutely zero scheduled delay. You're not going to be able to tolerate design changes,” said Huang.

Siemens and NVIDIA are focusing their collaboration on developing a repeatable blueprint for how these facilities should be designed and operated. The goal is to think about power, cooling, automation, grid integration, and operations before anything is built—not after.

Conclusion

The AI that is heading to the factory floor looks nothing like the AI most people talk about. It isn't meant to sound flashy, and it has to be more constrained, because if this AI doesn't work, systems fail. Companies want to see AI improve throughput, reduce scrap, avoid downtime, and keep people safe.

The industrial environment is the prime place for AI to show what it can do. Here, AI operates within real systems where mistakes carry substantial consequences, and it must account for power limits, latency, reliability, physical risk, and cost pressure. That reality is very different from consumer AI.

As CES 2026 showcased, companies are already using tools like digital twins and adaptive manufacturing to make decisions before committing to physical changes, with examples like PepsiCo’s showing measurable results. Some are going further by treating the factory as an intelligent system, where software coordinates operations instead of simply monitoring them.

The factory is where AI is being asked to mature in profound ways.

[1] https://www.youtube.com/watch?v=R4Wm6YdoZSs&t=1s

[2] https://www.mckinsey.com/capabilities/operations/our-insights/digital-twins-the-next-frontier-of-factory-optimization

[3] https://www.pepsico.com/newsroom/press-releases/2025/pepsico-announces-industry-first-ai-and-digital-twin-collaboration-with-siemens-and-nvidia

[4] https://nvidianews.nvidia.com/news/siemens-and-nvidia-expand-partnership-industrial-ai-operating-system